Problem Statement

Infra complexity scales up. Team size doesn’t.

As a result, engineers spend most of their cycles on repetitive tasks such as restarting pods, remediating drift, and maintaining runbooks, while important work stalls. DuploCloud’s AI DevOps Engineers absorb these routine operations inside your cloud, letting your team redirect their time toward architecture, reliability, and delivery.

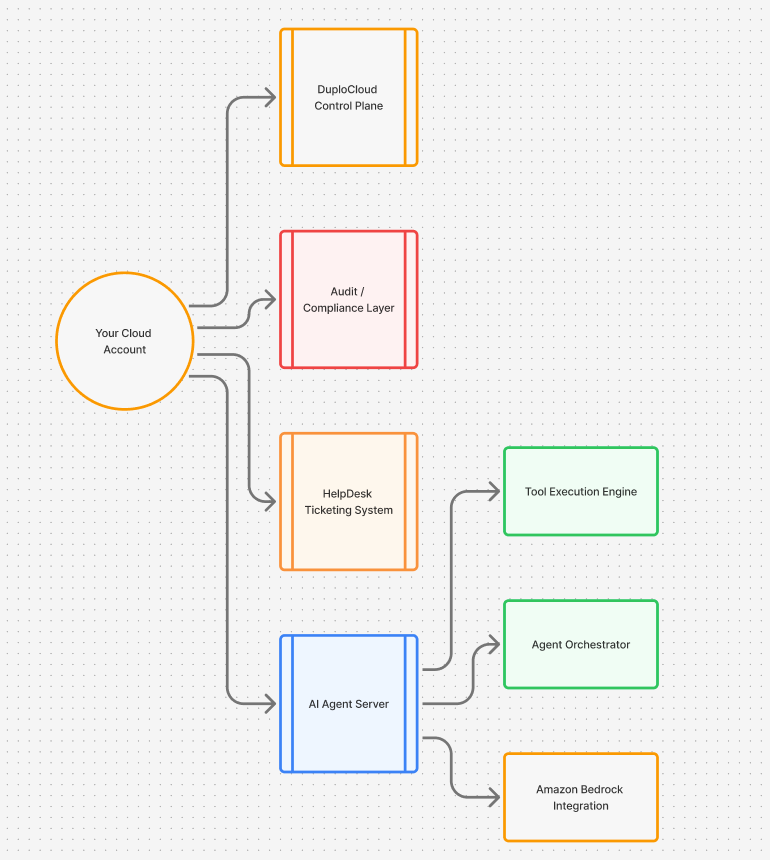

The Architecture

DuploCloud’s AI Agents are autonomous DevOps engineers that run entirely within your cloud account. We currently have these Agents deployed across 34 organizations in GRC, healthtech, SaaS, and government sectors.

They handle infrastructure tasks through a ticketing interface, always under human oversight.

Deployment Architecture

Here’s what our deployment architecture looks like in practice:

- Runs on a Kubernetes cluster in your VPC

- Makes no external API calls

- Ensures that all data processing happens in your infrastructure

- Supports air-gapped/private cloud deployments

- Integrates with AWS and runs LLMs in your SOC 2- or PCI-eligible Amazon Bedrock environment, automating infrastructure, security, and compliance work while keeping your data private. Your data is never used for model training.

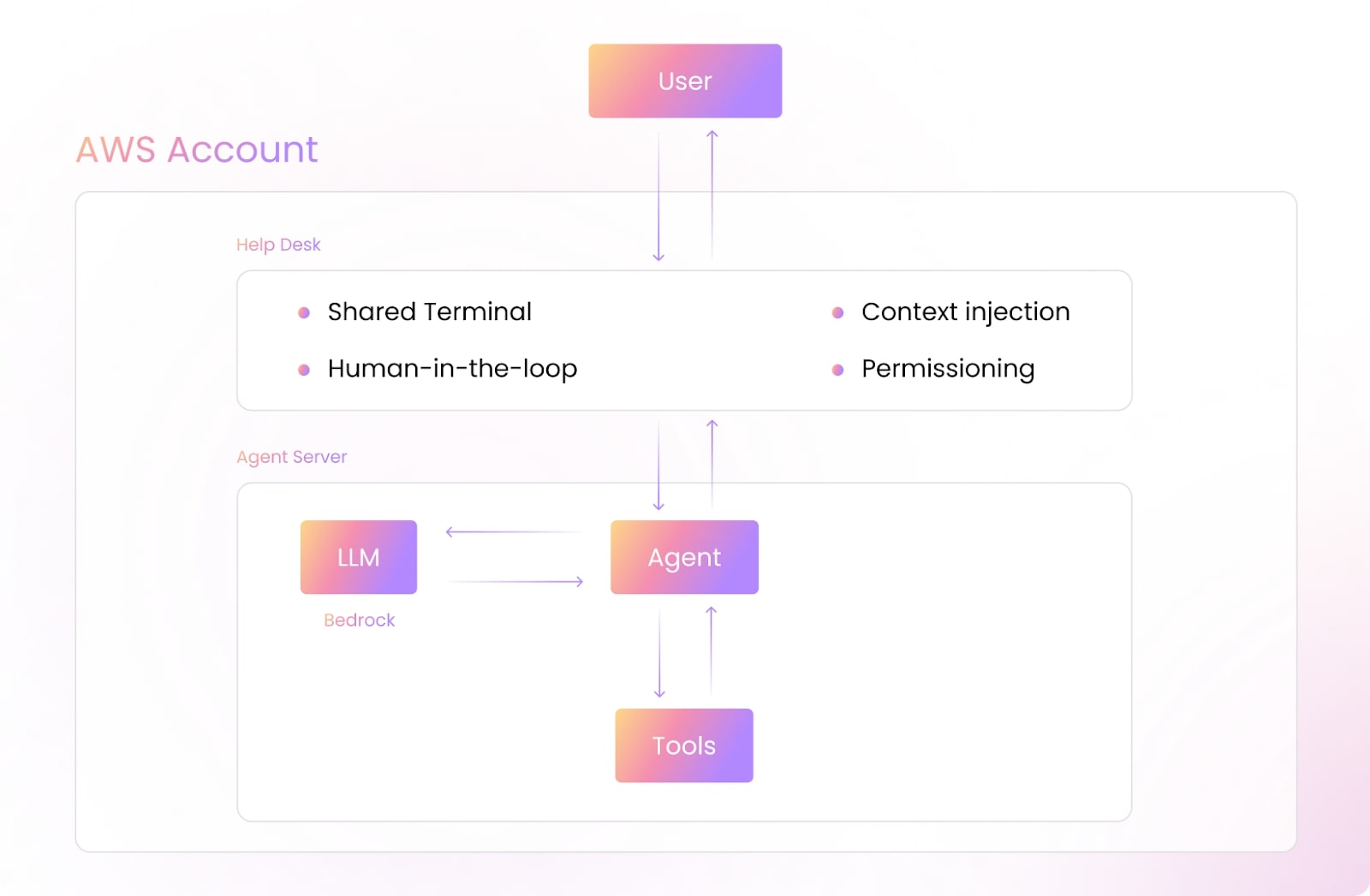

How the Deployment Architecture Works

In short, you get prebuilt, production-ready Agents that handle common DevOps and infrastructure management tasks. The Agents integrate with your existing DuploCloud infrastructure without friction, and we can deploy them immediately to automate routine operations and troubleshooting workflows.

Our Agents work within DuploCloud’s secure Tenant architecture. They inherit user permissions and maintain compliance with your organization’s security policies.

You can access them through Slack, the HelpDesk interface, or your IDE. From any of these, you can create tickets and collaborate with the Agents to resolve issues or have them perform tasks for you.

Here’s a sample ticket flow:

```text
# Example ticket flow
1. User creates ticket: "Production API returning 502 errors"
2. Ticket assigned to Observability Agent
3. Agent analyzes (using Bedrock Claude/Llama):
   - Pulls Grafana metrics
   - Checks pod status via kubectl
   - Reviews recent deployments
4. Agent proposes action: "Scale API pods from 3 to 5"
5. Human reviews and approves
6. Agent executes: kubectl scale deployment api --replicas=5
7. Agent monitors and reports results
```
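The flow above boils down to a small state machine in which execution is impossible without a recorded human approval. The sketch below is illustrative only: the `Ticket` and `State` types and the pluggable `runner` callable are hypothetical names, not DuploCloud's implementation, and the runner here is a dry-run stand-in rather than a real kubectl executor.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, List, Optional


class State(Enum):
    OPEN = "open"
    PROPOSED = "proposed"
    APPROVED = "approved"
    REJECTED = "rejected"
    DONE = "done"


@dataclass
class Ticket:
    """Hypothetical ticket model showing the approval gate."""
    title: str
    state: State = State.OPEN
    proposal: Optional[str] = None
    history: List[str] = field(default_factory=list)

    def propose(self, action: str) -> None:
        # The agent can only attach a proposal to an open ticket.
        if self.state is not State.OPEN:
            raise ValueError(f"cannot propose from state {self.state.value}")
        self.proposal = action
        self.state = State.PROPOSED
        self.history.append(f"proposed: {action}")

    def review(self, approved: bool, reviewer: str) -> None:
        # A named human records a decision on the exact proposal.
        if self.state is not State.PROPOSED:
            raise ValueError("no pending proposal to review")
        self.state = State.APPROVED if approved else State.REJECTED
        verdict = "approved" if approved else "rejected"
        self.history.append(f"{verdict} by {reviewer}")

    def execute(self, runner: Callable[[str], str]) -> str:
        # Nothing runs unless the proposal was explicitly approved.
        if self.state is not State.APPROVED:
            raise PermissionError("execution requires human approval")
        result = runner(self.proposal)
        self.state = State.DONE
        self.history.append(f"result: {result}")
        return result


if __name__ == "__main__":
    ticket = Ticket("Production API returning 502 errors")
    ticket.propose("kubectl scale deployment api --replicas=5")
    ticket.review(approved=True, reviewer="alice")
    # Dry-run runner in place of a real executor.
    print(ticket.execute(lambda cmd: f"dry-run: {cmd}"))
    print(ticket.history)
```

Keeping approval as an explicit state, rather than a flag checked somewhere in the agent, means every path to `execute` has to pass through `review`, and the history doubles as a simple audit trail.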

Want to see this in real time? Watch for our upcoming sandbox, where you can try these new agents.

Deploy the same agents (Kubernetes, Observability, CI/CD) inside your own cloud account, then watch them handle real tickets end-to-end.


The Six AI DevOps Engineers

Each agent performs a specialized DevOps role:

Architecture Agent – The Documentation Engineer


- Tools: Neo4j queries, AWS API calls, kubectl

- Purpose: Maintains real-time infrastructure documentation and dependency maps.

- How it works: Continuously crawls cloud resources and configuration, builds a live graph in Neo4j, and regenerates diagrams whenever something changes so docs never drift from reality.

- Example request: “Show all services dependent on RDS instance prod-db.”

- Output: Up-to-date Mermaid diagrams and markdown docs generated directly from the live infrastructure state, not stale wiki pages.
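To make the example request concrete, here is a minimal sketch of answering "what depends on `prod-db`?" and rendering the result as a Mermaid diagram. It assumes a hypothetical in-memory adjacency dict in place of the Neo4j graph. Against Neo4j, the same question would be a variable-length Cypher match such as `MATCH (s)-[:DEPENDS_ON*]->(:Resource {name: 'prod-db'}) RETURN DISTINCT s.name`, where the `DEPENDS_ON` relationship and `Resource` label are also hypothetical.

```python
from collections import deque
from typing import Dict, List

# Hypothetical crawl snapshot: each node lists the resources it depends on.
DEPENDS_ON: Dict[str, List[str]] = {
    "checkout-api": ["payments-svc", "prod-db"],
    "payments-svc": ["prod-db"],
    "reporting-job": ["analytics-db"],
    "web-frontend": ["checkout-api"],
}


def dependents_of(target: str, edges: Dict[str, List[str]]) -> List[str]:
    """Return every node that depends on `target`, directly or transitively."""
    # Invert the edges so we can walk from a resource to its consumers.
    consumers: Dict[str, List[str]] = {}
    for src, deps in edges.items():
        for dep in deps:
            consumers.setdefault(dep, []).append(src)
    seen, queue = set(), deque([target])
    while queue:  # breadth-first walk over consumers
        node = queue.popleft()
        for consumer in consumers.get(node, []):
            if consumer not in seen:
                seen.add(consumer)
                queue.append(consumer)
    return sorted(seen)


def _node(name: str) -> str:
    # Mermaid IDs are safest as [A-Za-z0-9_]; the real name becomes the label.
    safe = "".join(c if c.isalnum() else "_" for c in name)
    return f'{safe}["{name}"]'


def to_mermaid(target: str, edges: Dict[str, List[str]]) -> str:
    """Render `target` and everything that depends on it as a Mermaid flowchart."""
    nodes = set(dependents_of(target, edges)) | {target}
    lines = ["graph TD"]
    for src in sorted(nodes):
        for dep in edges.get(src, []):
            if dep in nodes:
                lines.append(f"    {_node(src)} --> {_node(dep)}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(dependents_of("prod-db", DEPENDS_ON))
    print(to_mermaid("prod-db", DEPENDS_ON))
```

Because the diagram is regenerated from the graph on every change, the Mermaid output can never describe a topology that no longer exists.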

Kubernetes Agent – The Platform Engineer

- Tools: Full kubectl access (within user permissions and with a human in the loop)

- Purpose: Handles day-to-day cluster operations and first-line troubleshooting.

- How it works: Watches cluster events, correlates logs and metrics, then suggests runbook steps; with one click, it can run the kubectl commands on your behalf.

- Example request: “Investigate crashlooping pods in prod.”

- Actions: Identifies failing pods, surfaces the likely root cause, proposes a remediation plan (restart, roll back, or scale out), and executes once you approve.
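A first triage step for "investigate crashlooping pods" can be sketched from the standard Pod status fields in `kubectl get pods -o json` output (`containerStatuses[].state.waiting.reason`, `lastState.terminated.reason`, `restartCount`). The `REMEDIATION` playbook that maps a termination reason to a suggestion is a hypothetical assumption. The function only proposes; running anything would still go through approval.

```python
import json
from typing import List, Tuple

# Hypothetical first-response playbook keyed by the container's last termination reason.
REMEDIATION = {
    "OOMKilled": "raise the memory limit or scale out",
    "Error": "check `kubectl logs <pod> -c <container> --previous`; roll back if a deploy just landed",
}


def crashlooping(pod_list_json: str) -> List[Tuple[str, str, int, str, str]]:
    """Parse `kubectl get pods -o json` output and list CrashLoopBackOff containers.

    Returns (pod, container, restart_count, last_termination_reason, suggestion) tuples.
    """
    findings = []
    for pod in json.loads(pod_list_json).get("items", []):
        pod_name = pod["metadata"]["name"]
        for cs in pod.get("status", {}).get("containerStatuses", []):
            waiting = cs.get("state", {}).get("waiting", {})
            if waiting.get("reason") != "CrashLoopBackOff":
                continue
            last = cs.get("lastState", {}).get("terminated", {}).get("reason", "Unknown")
            suggestion = REMEDIATION.get(last, "restart the pod and escalate to a human")
            findings.append((pod_name, cs["name"], cs.get("restartCount", 0), last, suggestion))
    # Most-restarted first, so the noisiest failure is triaged first.
    return sorted(findings, key=lambda f: f[2], reverse=True)
```

The input could come from `subprocess.run(["kubectl", "get", "pods", "-n", "prod", "-o", "json"], capture_output=True, text=True, check=True).stdout`, run with the requesting user's permissions.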

CI/CD Agent – The Release Engineer

- Tools: Jenkins API, GitHub Actions API

- Purpose: Pipeline failure resolution and pipeline optimization

- How it works: Subscribes to pipeline events, classifies failures, compares them to a library of past incidents, and proposes a fix or rollback when the remediation is deterministic.

- Integration: Webhooks auto-create tickets on pipeline failure

- Example Task: “Fix failing build #1234 in payment-service”

- Actions: Pinpoints the failing step, surfaces root cause, drafts or applies a patch/rollback, then re-runs the pipeline once you approve.
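The webhook-to-ticket step and the "compare to past incidents" step can be sketched as two small functions. This covers only GitHub Actions and relies on fields of its `workflow_run` webhook payload (`action`, `workflow_run.conclusion`, `run_number`, `head_branch`, `html_url`, `repository.name`). The `SIGNATURES` list and its "deterministic" flag are a hypothetical stand-in for a real incident library, and Jenkins is not covered here.

```python
import re
from typing import Any, Dict, Optional

# Hypothetical library of known failure signatures:
# (pattern, category, suggested fix, safe to propose automatically?)
SIGNATURES = [
    (re.compile(r"No space left on device"), "runner-disk", "clear the build cache and re-run", True),
    (re.compile(r"npm ERR! code ERESOLVE"), "dependency-conflict", "pin the conflicting package version", True),
    (re.compile(r"ModuleNotFoundError: No module named"), "missing-dependency", "add the module to requirements", True),
    (re.compile(r"\b\d+ failed\b"), "test-failure", "investigate the failing tests", False),
]


def ticket_from_workflow_run(payload: Dict[str, Any]) -> Optional[Dict[str, Any]]:
    """Map a GitHub `workflow_run` webhook payload to a ticket; ignore anything but failures."""
    run = payload.get("workflow_run", {})
    if payload.get("action") != "completed" or run.get("conclusion") != "failure":
        return None
    return {
        "title": f"Fix failing build #{run.get('run_number')} in {payload['repository']['name']}",
        "branch": run.get("head_branch"),
        "url": run.get("html_url"),
    }


def classify(log_text: str) -> Dict[str, Any]:
    """Match a failed job's log against known signatures; the first match wins."""
    for pattern, category, fix, deterministic in SIGNATURES:
        if pattern.search(log_text):
            return {"category": category, "fix": fix, "auto_propose": deterministic}
    # Unrecognized failures always go to a human.
    return {"category": "unknown", "fix": None, "auto_propose": False}
```

Only failures whose fix is flagged as deterministic get an automatic proposal. Everything else becomes a ticket with the classification attached, and a human decides.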

Observability Agent – The SRE

- Tools: Grafana API, OpenTelemetry queries

- Purpose: Monitoring and incident response

- How it works: Continuously queries logs, metrics, and traces, correlates spikes across services, and maps them back to recent deploys or infra changes.

- Example Task: “Analyze 500 errors spike from last hour”

- Actions: Clusters similar errors, identifies the probable culprit (service, commit, or dependency), and proposes concrete remediation steps or runbook actions.
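One common way to "cluster similar errors" is to mask volatile tokens (IDs, numbers) so that messages differing only in those tokens share a signature. The culprit search can then look for the latest deploy shortly before the spike began. In the sketch below, the 30-minute window and the shape of the deploy records are assumptions. Real inputs would come from Grafana or OpenTelemetry queries.

```python
import re
from collections import Counter
from datetime import datetime, timedelta
from typing import Dict, List, Optional, Tuple

# Mask volatile tokens so messages that differ only by IDs or numbers cluster together.
# Order matters: UUIDs and hex literals are masked before bare digits.
_VOLATILE = [
    (re.compile(r"\b[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}\b"), "<uuid>"),
    (re.compile(r"\b0x[0-9a-fA-F]+\b"), "<hex>"),
    (re.compile(r"\d+"), "<n>"),
]

ErrorEvent = Tuple[datetime, str, str]  # (timestamp, service, message)


def signature(message: str) -> str:
    for pattern, token in _VOLATILE:
        message = pattern.sub(token, message)
    return message


def cluster(errors: List[ErrorEvent]) -> Counter:
    """Count errors per (service, signature); use .most_common() for the biggest clusters."""
    return Counter((service, signature(msg)) for _, service, msg in errors)


def probable_culprit(errors: List[ErrorEvent], deploys: List[Dict],
                     window: timedelta = timedelta(minutes=30)) -> Optional[Dict]:
    """Return the latest deploy that landed within `window` before the first error, if any."""
    if not errors:
        return None
    first = min(ts for ts, _, _ in errors)
    candidates = [d for d in deploys if first - window <= d["at"] <= first]
    return max(candidates, key=lambda d: d["at"]) if candidates else None
```

Time proximity is only a heuristic. A deploy that landed just before a spike is a strong lead, not proof, which is why the agent proposes remediation rather than acting on it.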

Cost Optimization Agent – The FinOps Engineer

- Tools: AWS Cost Explorer, resource tagging APIs

- Purpose: Cloud spend analysis and optimization

- How it works: Pulls detailed cost and usage data, groups it by tags / accounts / services, then runs rules to detect idle, over-provisioned, or poorly reserved resources.

- Example Task: “Identify unused EBS volumes over 30 days old”

- Actions: Produces a prioritized savings report with estimated monthly impact and can generate change sets or tickets to right-size or decommission resources.
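The example task ("unused EBS volumes over 30 days old") can be sketched as a filter over records shaped like EC2 `DescribeVolumes` results (`VolumeId`, `Size`, `State`, `VolumeType`, `CreateTime`). Two assumptions are worth noting. First, the per-GiB prices are illustrative placeholders, not a pricing source. Second, age is measured from `CreateTime`, because `DescribeVolumes` does not report how long a volume has been detached.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Optional

# Illustrative USD per GiB-month; real EBS prices vary by region and change over time.
PRICE_PER_GIB_MONTH = {"gp2": 0.10, "gp3": 0.08}
DEFAULT_PRICE = 0.10  # assumption for volume types not listed above


def unused_volume_report(volumes: List[Dict], now: Optional[datetime] = None,
                         min_age_days: int = 30) -> List[Dict]:
    """Rank unattached ('available') volumes older than `min_age_days` by estimated monthly cost."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=min_age_days)
    report = []
    for v in volumes:
        if v["State"] != "available" or v["CreateTime"] > cutoff:
            continue  # attached, or too new to flag
        price = PRICE_PER_GIB_MONTH.get(v["VolumeType"], DEFAULT_PRICE)
        report.append({
            "VolumeId": v["VolumeId"],
            "SizeGiB": v["Size"],
            "EstMonthlyUSD": round(v["Size"] * price, 2),
        })
    # Biggest savings first, matching a prioritized report.
    return sorted(report, key=lambda r: r["EstMonthlyUSD"], reverse=True)
```

With boto3, the input could be gathered from `boto3.client("ec2").get_paginator("describe_volumes").paginate(Filters=[{"Name": "status", "Values": ["available"]}])` by concatenating each page's `Volumes` list. Deletion would remain a separate, approved change.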

PrivateGPT Agent – The Compliance Analyst

- Tools: AWS Bedrock (Claude models)

- Purpose: Secure document/code analysis

- How it works: Runs LLM analysis inside your own environment, restricting data to approved repositories and applying policy checks before any output leaves the sandbox.

- Example Task: “Review my expense data for Denver”

- Actions: Flags policy or PII issues, summarizes key findings, and produces redlined recommendations you can feed into your compliance workflows.
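A "policy check" can be as simple as masking obvious PII before text is sent to the model or leaves the environment. The sketch below is a hypothetical, deliberately simplified filter, not DuploCloud's policy engine. It uses US-centric regexes for emails and SSNs, plus a Luhn checksum to avoid flagging random 13 to 16 digit numbers as card numbers.

```python
import re
from typing import Dict, Tuple

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
# 13-16 digits, optionally separated by single spaces or hyphens; starts and ends on a digit.
CARD = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")


def luhn_ok(digits: str) -> bool:
    """Luhn checksum, used to cut false positives on card-number-like strings."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def redact(text: str) -> Tuple[str, Dict[str, int]]:
    """Mask emails, US SSNs, and Luhn-valid card numbers; return the text and per-type counts."""
    findings = {"email": 0, "ssn": 0, "card": 0}

    def _card(match) -> str:
        digits = re.sub(r"\D", "", match.group())
        if luhn_ok(digits):
            findings["card"] += 1
            return "[CARD]"
        return match.group()  # not a valid card number; leave it alone

    text, findings["email"] = EMAIL.subn("[EMAIL]", text)
    text, findings["ssn"] = SSN.subn("[SSN]", text)
    text = CARD.sub(_card, text)
    return text, findings
```

The per-type counts are what a compliance report would surface ("3 card numbers masked"), while only the redacted text continues to the Bedrock model.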

Explore the agents hands-on

You’ll get a clean environment to experiment with commands, approvals, and audits, without any setup friction.


Security & Compliance

Compliance Standards Supported:

- SOC 2 Type II

- HIPAA

- PCI-DSS

- NIST 800-53

- FedRAMP

Security Controls:

Here’s a summary of those controls in YAML:

```yaml
Access Control:
  - RBAC inheritance from DuploCloud tenants
  - No standalone service accounts
  - JIT access for privileged operations

Audit:
  - Every agent action logged with:
      - User who created ticket
      - Agent decisions
      - Tools executed
      - Human approvals
  - Logs shipped to your SIEM (Splunk/DataDog/CloudWatch)

Data Protection:
  - Encryption at rest (AES-256)
  - TLS 1.3 for all communications
  - No data leaves your cloud account
```

Obviously, security is foundational.


DuploCloud’s AI agents operate under strict governance. We make sure that autonomy never compromises control. Access is tightly managed through Role-Based Access Control (RBAC) inherited directly from DuploCloud users.

This eliminates the need for standalone service accounts that could introduce risk. For high-impact operations, Just-In-Time (JIT) access is enforced.

Privileges are granted only when required, for the exact duration needed, and automatically revoked upon completion.
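The grant, use, and auto-revoke pattern maps naturally onto a context manager. In this hypothetical sketch, `GrantStore`, the principal names, and the privilege strings are illustrative, not DuploCloud APIs. The grant lives only for the duration of the `with` block, a TTL caps it in case the operation hangs, and `finally` revokes it even if the operation fails.

```python
import time
from contextlib import contextmanager
from typing import Callable, Dict, Tuple


class GrantStore:
    """Hypothetical in-memory record of time-bound privilege grants."""

    def __init__(self, clock: Callable[[], float] = time.monotonic):
        self._grants: Dict[Tuple[str, str], float] = {}  # (principal, privilege) -> expiry
        self._clock = clock

    def allowed(self, principal: str, privilege: str) -> bool:
        expiry = self._grants.get((principal, privilege))
        return expiry is not None and self._clock() < expiry

    @contextmanager
    def jit(self, principal: str, privilege: str, ttl_seconds: float):
        """Grant `privilege` for the body of the `with` block, capped at `ttl_seconds`."""
        key = (principal, privilege)
        self._grants[key] = self._clock() + ttl_seconds
        try:
            yield
        finally:
            # Revoke on completion, whether the operation succeeded or raised.
            self._grants.pop(key, None)


if __name__ == "__main__":
    store = GrantStore()
    with store.jit("k8s-agent", "deployments:scale", ttl_seconds=300):
        print(store.allowed("k8s-agent", "deployments:scale"))
    print(store.allowed("k8s-agent", "deployments:scale"))
```

The injectable `clock` is there so the expiry behavior can be tested without sleeping.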

Every action taken by an AI DevOps Engineer is fully auditable. From the moment a user creates a ticket, the system logs the full decision chain:

- Agent reasoning

- Tools invoked

- Any human approvals required

These immutable records are automatically forwarded to your existing SIEM environment, whether Splunk, DataDog, CloudWatch, or DuploCloud’s Observability Suite. This allows for seamless integration into your compliance and monitoring workflows.
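One common technique for making an audit log tamper-evident is hash chaining: each entry's hash covers the previous entry's hash, so editing or reordering any record breaks verification from that point on. The sketch below illustrates that technique under assumed record fields. It is not a description of how DuploCloud stores its records.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev: str, record: Dict) -> str:
    body = json.dumps(record, sort_keys=True)  # canonical form so hashes are stable
    return hashlib.sha256((prev + body).encode()).hexdigest()


def append_record(log: List[Dict], record: Dict) -> List[Dict]:
    """Append `record`, chaining it to the previous entry's hash."""
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "prev": prev, "hash": _digest(prev, record)})
    return log


def verify(log: List[Dict]) -> bool:
    """Recompute the chain. Edited, reordered, or removed-from-the-middle entries fail.

    Truncating the tail is only detectable if the latest hash is also kept elsewhere,
    for example in the SIEM.
    """
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev or entry["hash"] != _digest(prev, entry["record"]):
            return False
        prev = entry["hash"]
    return True
```

Shipping each entry's hash to the SIEM as it is written gives an independent copy to check the chain against.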

Data sovereignty and protection are non-negotiable.

All data at rest is secured with AES-256 encryption, while TLS 1.3 safeguards every communication in flight.
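On the client side, a TLS 1.3 floor is a single setting in Python's standard `ssl` module. This snippet only illustrates the control and is not DuploCloud code.

```python
import ssl


def tls13_client_context() -> ssl.SSLContext:
    """Client-side context that verifies certificates and refuses anything below TLS 1.3."""
    ctx = ssl.create_default_context()  # CERT_REQUIRED and hostname checking by default
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3
    return ctx
```

A socket wrapped with `ctx.wrap_socket(sock, server_hostname=host)` then fails the handshake against any server that cannot negotiate TLS 1.3.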

Most importantly, no data ever leaves your cloud account. Your infrastructure remains the single source of truth, giving you full visibility and control over autonomous operations.

Deployment Process

Here’s what a typical deployment looks like:

```bash
# 1. Prerequisites
#   - DuploCloud platform deployed
#   - Amazon Bedrock enabled in your AWS account
#   - Kubernetes 1.24+ (for K8s agent)
#   - Terraform 1.0+ (for IaC)

# 2. Infrastructure as Code deployment
#    (list-typed -var values must use HCL syntax with quoted strings)
terraform apply -var="enable_agents=true" \
  -var='agent_types=["kubernetes","architecture","observability"]'

# 3. Configuration
duploctl config set agent.bedrock.model "claude-3-sonnet"
duploctl config set agent.approval.required "true"
duploctl config set agent.audit.destination "s3://audit-bucket"

# 4. Access points
#   - Browser: https://your-duplo.domain/helpdesk
#   - Slack: /duplo command integration
#   - VS Code: DuploCloud extension with agent access
```

Interfaces and Workflow

The Three Ways to Interact

- Web Interface: Full HelpDesk with ticket history, approvals, and audit trail

- Slack: /duplo create-ticket "Check cluster health"

- VS Code: Right-click a Kubernetes manifest, then choose "Ask Kubernetes Agent"

Usage Model

Usage-based pricing per ticket: starts at 200 tickets/month on the core plan

What Counts as a Ticket:

- Any user-initiated request

- Auto-created tickets from CI/CD failures

- Scheduled maintenance tasks

What’s Free:

- Agent installation and configuration

- Audit log storage (you pay only for your own S3/blob storage)

- Integration setup

Current Capabilities & Roadmap

- Language: This application currently supports English. We have multilingual support on our roadmap.

- Cloud Providers: Fully supported on AWS. We’ve got Azure and GCP integrations coming soon.

- Observability: We have distributed traces and spans to enhance your visibility across systems.

- Regions: Our Agents currently operate with multi-region coordination.

- Models: Only Claude models are supported today; support for additional models is expanding.

- Response Time: You can typically expect the first tokens to stream back within 5–30 seconds, with ongoing optimization for faster execution.

Compared to Alternatives

| Feature | DuploCloud Agents | GitHub Copilot for CLI | K8sGPT |
|---|---|---|---|
| Deployment | Self-hosted | Cloud-only | Self-hosted |
| Multi-tool | Yes (6 agents) | No | K8s only |
| Compliance | SOC2/HIPAA/PCI | Limited | None |
| Human Approval | Built-in | No | No |
| Ticket System | Yes | No | No |
| Cost | Per ticket | Per user | Open source |

FAQ

Can agents access production without approval?

No. You can configure approval requirements based on the environment and action type.
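Approval rules keyed on environment and action type can be modeled as a small lookup with wildcards. In this sketch, the policy table, action names, and wildcard convention are hypothetical. The most specific rule wins, and anything unmatched fails closed by requiring approval.

```python
from typing import Dict, Tuple

# Hypothetical policy table: (environment, action type) -> human approval required?
# "*" is a wildcard. The most specific entry wins; anything unmatched requires approval.
POLICY: Dict[Tuple[str, str], bool] = {
    ("prod", "*"): True,
    ("staging", "read"): False,
    ("staging", "*"): True,
    ("dev", "*"): False,
}


def needs_approval(environment: str, action_type: str,
                   policy: Dict[Tuple[str, str], bool] = POLICY) -> bool:
    # Check from most to least specific.
    for key in ((environment, action_type), (environment, "*"), ("*", action_type), ("*", "*")):
        if key in policy:
            return policy[key]
    return True  # fail closed when no rule matches
```

Failing closed means a new or misspelled environment name can never silently bypass review.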

What happens if Bedrock is down?

If Bedrock is down, our Agents fail safely, and your manual operations continue normally.

Can we use our own models?

Not yet. Ollama/self-hosted model support is on our roadmap for Q2 2025.

How do you prevent hallucinations?

Our Agents can only execute pre-defined tools with parameter validation. They cannot run arbitrary commands.
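Here is what "pre-defined tools with parameter validation" can look like in practice. The model may only name a registered tool and supply parameters, and each parameter must pass a validator before an argv list (never a shell string) is built. The registry, the `scale_deployment` tool, and the 0 to 20 replica cap are hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict, List

# Conservative DNS-1123 label check for Kubernetes object names.
DNS_LABEL = re.compile(r"[a-z0-9]([-a-z0-9]{0,61}[a-z0-9])?")


@dataclass(frozen=True)
class Tool:
    validators: Dict[str, Callable[[str], bool]]  # one check per allowed parameter
    build: Callable[..., List[str]]  # turns validated params into an argv list (no shell)


# Hypothetical registry: the model can only name one of these, never a raw command.
TOOLS: Dict[str, Tool] = {
    "scale_deployment": Tool(
        validators={
            "deployment": lambda v: DNS_LABEL.fullmatch(v) is not None,
            # Hypothetical guardrail: 0-20 replicas.
            "replicas": lambda v: re.fullmatch(r"[0-9]{1,2}", v) is not None and int(v) <= 20,
        },
        build=lambda deployment, replicas: [
            "kubectl", "scale", "deployment", deployment, f"--replicas={replicas}",
        ],
    ),
}


def plan_call(tool_name: str, **params: str) -> List[str]:
    """Validate a model-proposed tool call and return the argv to run once approved."""
    tool = TOOLS.get(tool_name)
    if tool is None:
        raise ValueError(f"unknown tool: {tool_name}")
    if set(params) != set(tool.validators):
        raise ValueError(f"expected parameters {sorted(tool.validators)}, got {sorted(params)}")
    for key, check in tool.validators.items():
        value = params[key]
        if not isinstance(value, str) or not check(value):
            raise ValueError(f"invalid value for {key!r}: {value!r}")
    return tool.build(**params)
```

A hallucinated tool name or an injected value such as `"api; rm -rf /"` is rejected before anything reaches an approver, let alone the cluster.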

Do you integrate with existing tools?

Yes. APIs are available, and our current integrations include ServiceNow, PagerDuty, and Jira (via webhooks).

How can I see what autonomous DevOps feels like?

Start by running the agents locally. Then, watch how they interact with your existing stack. Finally, decide for yourself where they fit.