Executive Summary

DuploCloud was engaged by a strategic customer, one of the world’s leading supply chain risk management platforms, to migrate its decade-old IT infrastructure, holding a few terabytes of data, from a private data center to the AWS and Azure clouds. The customer’s hosting costs were reduced from north of $1,000,000 per year to $250,000. DuploCloud completed the project with under 19 hours of effective downtime, after three weeks of agile planning and mock drills.

The Challenge

The infrastructure was set up more than a decade ago. At a high level, there were two main challenges:

- Data Migration with Minimum Downtime: There were terabytes of data, and this was a 24x7 SaaS platform used across the globe, with manufacturers using it from five continents.

- Minimum Cost: The whole purpose of the exercise was cost reduction, which had to be balanced against the complexity of a system that was largely undocumented. The client had previously made several failed attempts at this migration.

“Most vendors took an infrastructure centric view, while migration challenges are fundamentally at the application layer. Their quotes to transform the app or even own the application migration aspects were prohibitive.”

DuploCloud Approach

Our developer background, our experience managing millions of VMs across the globe in Azure, and the many migrations we have been through across various application stacks have given our team a playbook that instills confidence.

“The key to our approach is application first; the infrastructure will be woven by our bots.”

- Application Block Diagram: We identified the blocks of the application, where each block is an independently executable unit. A unit can be code authored in-house, an ISV product, or a SaaS service. We discovered that the stack included .NET, SQL, Java, SSIS, SSRS, FTP, and Tableau, among others.

- Connectivity Matrix: We identified the data flows and how access is granted between the various components, especially the “hard-coded configs”.

- Data (Stateful and Stateless Components): We identified which components were stateful and which were stateless. This is required to plan the data migration and is the primary parameter that governs downtime during migration. Backup and disaster recovery planning also builds on this information. Data was the hardest part, as we had terabytes of files and SQL data.

- Create Infrastructure in Public Cloud: Given our bots, this is largely a no-op and is our great differentiator. With bots, it is easy for us to keep iterating on the topology with no overhead and without worrying about securing it; the bots do that work implicitly. For example, while copying data in this project, we tried an S3 bucket and discarded it for slowness, created a bastion for copying over RDP, and created load balancers, servers, databases, and whatnot, all without worrying about AWS nuances.

“We feed in an application topology and an infrastructure is auto-generated that is compliant with best practices. The results are compared with existing infrastructure for sanity.”
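
As an illustration of what an application topology can look like as input, the following is a minimal sketch in Python. It is not DuploCloud's actual format or tooling: the `Block` and `Flow` types, the block names, and the ports are all hypothetical. It records which blocks are stateful and turns a connectivity matrix into per-block ingress rules.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Block:
    """One independently executable unit of the application (hypothetical type)."""
    name: str
    stateful: bool


@dataclass(frozen=True)
class Flow:
    """One entry of the connectivity matrix: source talks to target on a port."""
    source: str
    target: str
    port: int


def ingress_rules(blocks: List[Block], flows: List[Flow]) -> Dict[str, List[Tuple[str, int]]]:
    """Group flows by target so each block lists who may reach it and on which port."""
    known = {b.name for b in blocks}
    rules: Dict[str, List[Tuple[str, int]]] = defaultdict(list)
    for flow in flows:
        if flow.source not in known or flow.target not in known:
            raise ValueError(f"flow references an unknown block: {flow}")
        rules[flow.target].append((flow.source, flow.port))
    return {target: sorted(allowed) for target, allowed in rules.items()}


def stateful_blocks(blocks: List[Block]) -> List[str]:
    """Names of the blocks whose data must be migrated, sorted for stable output."""
    return sorted(b.name for b in blocks if b.stateful)


# Hypothetical example topology; names and ports are illustrative only.
BLOCKS = [
    Block("web", stateful=False),
    Block("etl", stateful=False),
    Block("sql", stateful=True),
    Block("files", stateful=True),
]
FLOWS = [
    Flow("web", "sql", 1433),
    Flow("etl", "sql", 1433),
    Flow("web", "files", 445),
]

if __name__ == "__main__":
    print(ingress_rules(BLOCKS, FLOWS))
    print(stateful_blocks(BLOCKS))
```

Keeping the stateful flag on each block makes it easy to see which parts drive the data migration plan, while everything else can be recreated from scratch.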

Data Migration

- AWS Direct Connect: We got a link in the 1 Gbps capacity tier. As best we recall, we were getting about 200 MBps of upload.

- File Storage Migration: We created a file share on a Windows server in AWS. We used Microsoft RichCopy, which can do differential file copies between source and destination based on checksums. We had to run it for several weeks, syncing data, before the actual swap.

- SQL Migration: For SQL, we took a weekly full backup and added a differential backup every day. We realized the daily differential was also too big, so we moved to twice a day. Several hours before the planned downtime, we started copying the weekly backup, followed by the differential backups. Once this was complete, we turned off the system and took another differential backup. Then came the usual joys, including a corrupted final twice-daily backup. What should have taken 15 minutes took 6 hours. Fortunately, we had buffered enough planned downtime.
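
The restore order implied by the SQL step can be sketched as follows. This is a minimal illustration in Python, not the scripts used in the project: the `Backup` type and its `verified` flag are hypothetical. It relies on one general property of differential backups: each differential depends on the most recent full backup taken before it, so a restore needs that full backup plus the latest usable differential based on it.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Tuple


@dataclass(frozen=True)
class Backup:
    """A backup file in the catalog (hypothetical type)."""
    kind: str              # "full" or "diff"
    taken_at: datetime
    verified: bool = True  # False if an integrity check failed


def plan_restore(backups: List[Backup]) -> List[Backup]:
    """Return the backups to restore, in order.

    Picks the latest verified full backup, then the latest verified
    differential that was taken on top of that same full backup.
    """
    chains: List[Tuple[Backup, List[Backup]]] = []
    for backup in sorted(backups, key=lambda b: b.taken_at):
        if backup.kind == "full":
            chains.append((backup, []))
        elif backup.kind == "diff" and chains:
            # A differential belongs to the most recent full before it.
            chains[-1][1].append(backup)

    for full, diffs in reversed(chains):
        if not full.verified:
            continue  # its differentials are unusable without it
        good_diffs = [d for d in diffs if d.verified]
        return [full] + good_diffs[-1:]

    raise ValueError("no verified full backup available")
```

If the newest differential fails verification, the plan falls back to the previous one, which is the point at which a fresh differential would need to be taken, as happened in this migration.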

Main Glitch

We were most nervous about the data, but that all went relatively smoothly, with the exception of the SQL part, and that was minor. After bringing the application live, we started seeing unexpected session timeouts. We discovered that the co-location load balancer had some features that the AWS load balancers did not seem to have. At first there was a bit of panic among the younger engineers, who asked how we could have missed this. But after bouncing between the Classic Load Balancer, ALB, and NLB, along with some IIS tweaks, we settled on ALB and resolved the issues.

“Our bots were very efficient as we kept asking for different infrastructure feature sets. That was also one area where we did not have to worry about human emotions as we kept asking for changes while working 48 hours straight. The whole DevOps and Security effort was basically a no-op here.”

Over the next few days, because of the changed IPs, some clients had FTP issues, and we worked through those.

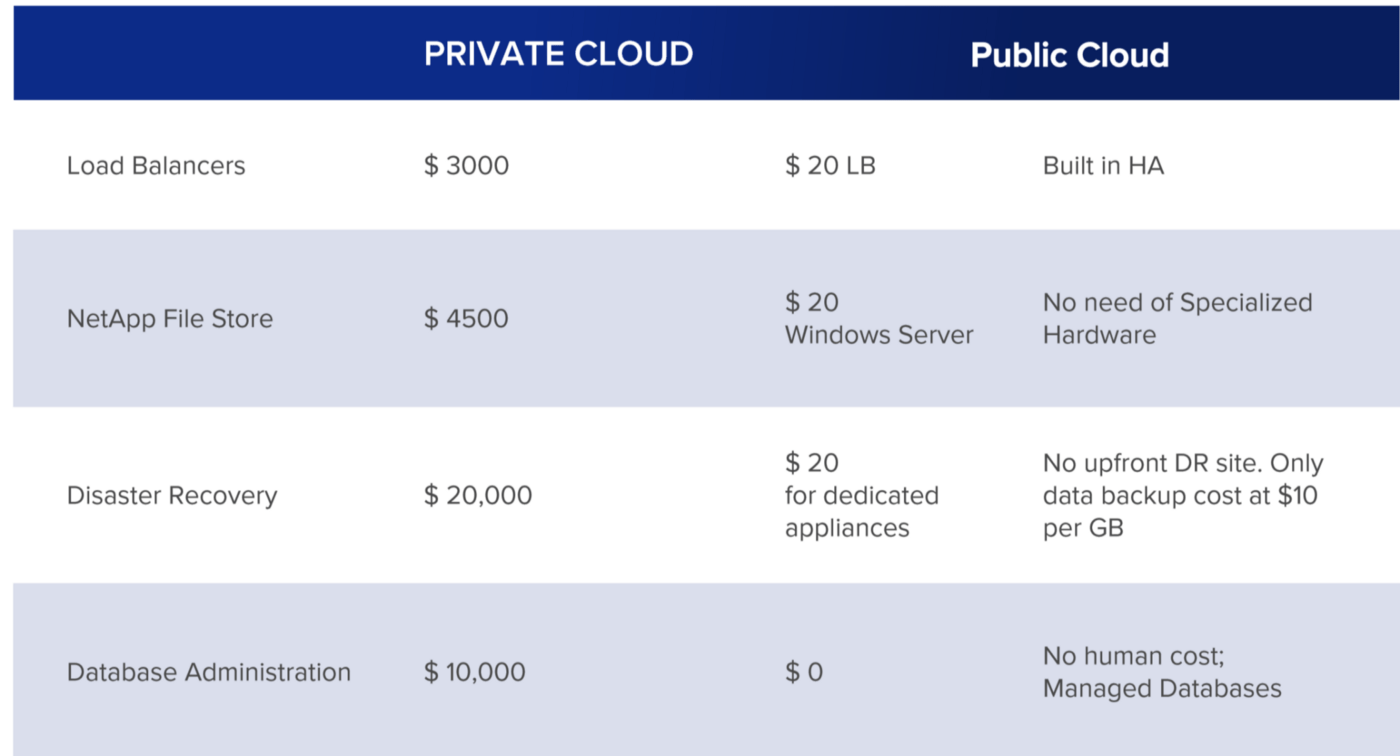

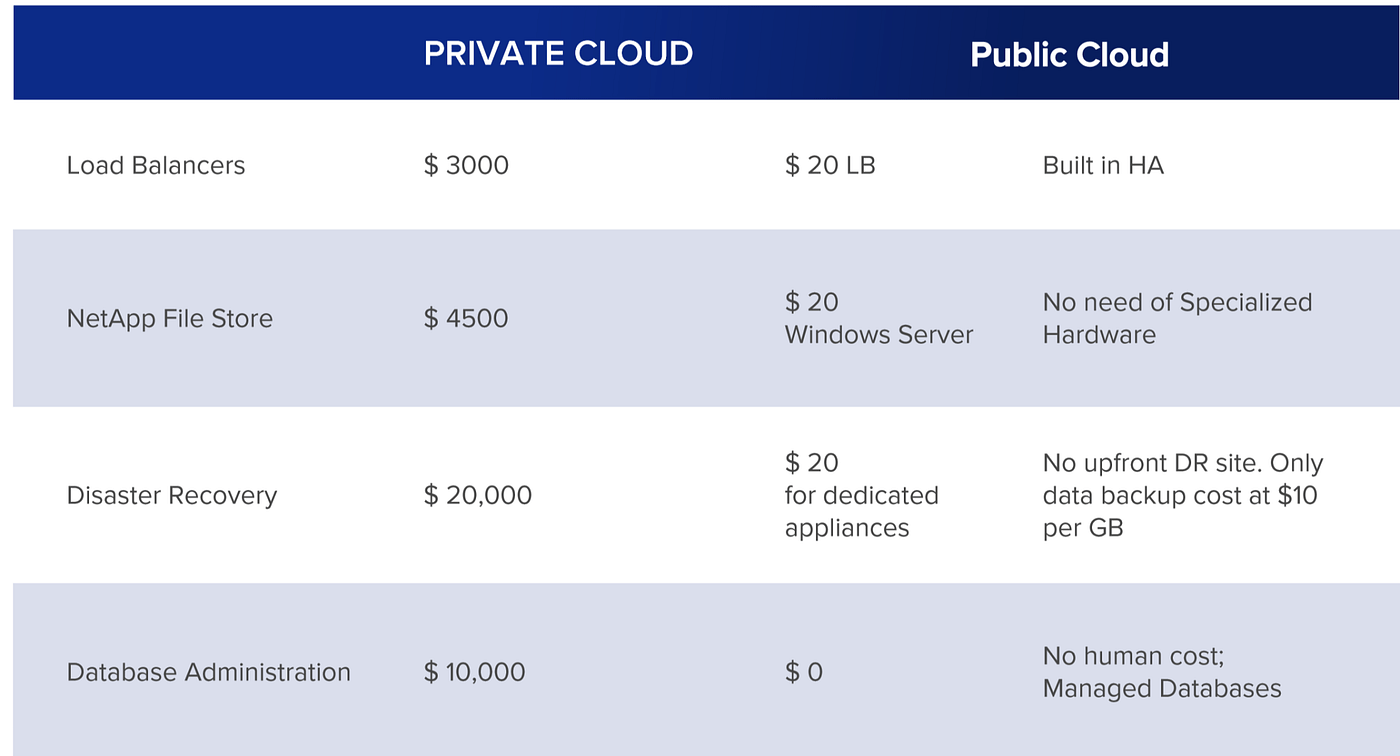

Savings Chart

About one third of the savings came from operations offloaded to bots. The following table compares the on-premises and cloud offerings that helped us realize the majority of the capex savings. Note that in the public cloud we fundamentally don’t need to create an active DR site.

Result

After the migration to AWS, the system cost dropped from more than $100,000 to $20,000 a month. The entire migration was completed within a weekend across global geographies, after three weeks of agile planning. DuploCloud’s automated bots made the server migration seamless. We enabled SOC 2 certification, and along with DevSecOps services, DuploCloud is also assisting with archiving the client’s Salesforce data to Azure, enabling additional savings. DuploCloud is now the managed service provider for the customer’s AWS and Azure infrastructure.
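
As a quick check on how the monthly figures relate to the annual ones in the summary, the arithmetic can be sketched in Python. The function names are illustrative, and $100,000 is treated as a floor because the source says "more than".

```python
def annualize(monthly_cost: float) -> float:
    """Convert a monthly cost to a yearly cost."""
    return monthly_cost * 12


def reduction_fraction(before: float, after: float) -> float:
    """Fraction of the original cost that was eliminated."""
    if before <= 0:
        raise ValueError("the original cost must be positive")
    return (before - after) / before


if __name__ == "__main__":
    before_year = annualize(100_000)  # floor: the source says "more than"
    after_year = annualize(20_000)
    print(f"before: ${before_year:,.0f} per year")
    print(f"after:  ${after_year:,.0f} per year")
    print(f"reduction: {reduction_fraction(100_000, 20_000):.0%}")
```

At $20,000 a month the annual cost is $240,000, in line with the roughly $250,000 quoted in the summary, and the reduction from the $100,000 floor is 80 percent.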