PCI, HIPAA, and HITRUST Compliance with DuploCloud

SECURITY WHITE PAPER

Introduction

Businesses operating in regulated industries must abide by guidelines set forth by the standards bodies of their respective industries. For example, the payment card industry has defined the Data Security Standard (PCI DSS), and health care has HIPAA and HITRUST. SOC 2 is a more generic standard that is widely used across a broad set of industries. There are also guidelines based on the region of operations, such as GDPR. These guidelines, called “controls”, are split into IT and non-IT functions (e.g., HR, Finance, Legal). IT controls are split into the following categories:

- Controls implemented by the cloud provider. These cover items such as the physical datacenter, host virtualization, and the management software and services provided by the cloud provider. AWS, Azure, and GCP meet virtually all the standards, and their certifications are readily available for download from their websites.

- Controls to be implemented by the organization’s cloud ops team. These pertain to how the organization hosting its application on the cloud consumes and configures the various cloud provider services. The cloud providers themselves are not responsible for this, but they do provide prescriptive guidelines on implementing various controls using their own services, community software, and third-party commercial software available in their marketplaces. For example, AWS publishes the “Operational Best Practices for PCI DSS 3.2.1” conformance pack for AWS Config.

- Controls to be implemented by the organization’s product team. As with infrastructure controls, there is a set of guidelines around software development and release procedures that the product development team must follow.

- Controls to be implemented by the organization’s user device management team. These are controls around the use and safety of user devices such as company laptops and mobile phones.

Did You Know?

DuploCloud provides a no-code approach to DevOps automation that gives cloud-native application developers secure and compliant application deployment out of the box, 10x faster automation, and up to 70% reduction in cloud operating costs.

This document focuses on the second category above, controls implemented by the organization’s cloud ops team, and describes DuploCloud’s implementation of those controls. It also provides an implementation matrix mapping the respective compliance standards to the DuploCloud implementation.

It is a common misconception that if the cloud provider meets a certain compliance standard, say SOC 2, the organization hosting its application on that provider is automatically fully certified. The cloud follows a shared security model: consumers are responsible for configuring the services provided by the cloud vendor to match their security requirements. A simple example is a web application exposed to the internet with all ports open; the blame lies squarely with the hosting organization.

DuploCloud Approach

DuploCloud is a DevSecOps software platform which builds and operates a fully compliant infrastructure on your behalf based on the standard of your choice.

DuploCloud is a no-code solution that stitches together DevOps tooling, security tools, and cloud APIs to build and operate a fully compliant and secure infrastructure. Unlike other security or DevOps tools that operators integrate into their infrastructure to perform a specialized, siloed function, DuploCloud is fundamentally a labor-optimization solution, reducing implementation time from six months to one week.

To implement any infrastructure control, the first preference is a native solution from the cloud provider. About 90% of the controls are implemented this way, by orchestrating the relevant feature sets in AWS, Azure, or GCP via their APIs. Next, standard community software is considered for any remaining controls, for example Wazuh as the SIEM, ClamAV for antivirus, and Suricata for NIDS. Finally, for the remaining controls, or based on customer preference for a certain tool, the framework integrates third-party ISV tools. This extensibility is also available for user-added plugins. For example, DuploCloud is currently integrated with Sentry for alerting, Jira for incident management, Sumo Logic for log collection, and SignalFx for metrics.

At an architecture level, DuploCloud operates with the following five declarative specifications:

- Product Architecture

- Availability requirements

- Scale needs

- Compliance Standard (like PCI, HIPAA, SOC 2)

- Cost considerations

Internally, the software is a rules-based engine that combines these requirements with cloud subject-matter expertise (IAM, AD policies, security group rules, availability zones, regions, etc.) and with compliance guidelines (such as separating production and stage into different networks), and runs all of this through a state machine to produce the desired output. The state machine remains active after configuration and reconciles or alerts on any drift. Updates go through the same process.
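The reconcile step can be pictured with a minimal Python sketch. It assumes that desired and observed state are both available as dictionaries keyed by a resource identifier; the `Action` type and `reconcile` function are hypothetical illustrations, not DuploCloud’s internal API.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    kind: str      # "create", "update", or "delete"
    resource: str  # hypothetical resource identifier, e.g. "sg-web"


def reconcile(desired: dict[str, dict], actual: dict[str, dict]) -> list[Action]:
    """Diff the declared state against the observed cloud state.

    Returns the corrective actions; a caller could apply them or raise an
    alert instead, mirroring the "reconcile or alert" behavior described above.
    """
    actions: list[Action] = []
    for name, spec in desired.items():
        if name not in actual:
            actions.append(Action("create", name))  # declared but missing
        elif actual[name] != spec:
            actions.append(Action("update", name))  # drifted from the spec
    for name in actual:
        if name not in desired:
            actions.append(Action("delete", name))  # orphan: not declared anywhere
    # A deterministic order makes the output easy to log and compare.
    return sorted(actions, key=lambda a: (a.kind, a.resource))
```

Calling this on a timer (the implementation matrix below mentions a 30-second audit cycle for tracked resources) turns the one-shot diff into a continuous drift detector.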

Self-Hosted

DuploCloud is single-tenant software that installs either in your cloud account or in a DuploCloud cloud account dedicated to you. Users interact with the software via the browser UI and/or API calls. All data and configuration stays within your cloud account and under your control, and every configuration the software creates and applies is transparently available for review and editing there.

Policy Model

DuploCloud exposes a declarative policy model that forms the basis of the implementation. A brief overview follows; detailed product documentation is available in the DuploCloud AWS User Guide and Azure User Guide.

- Infrastructure. An infrastructure maps 1:1 with a VPC/VNET and can be in any region. Each infrastructure has a set of subnets spread across multiple availability zones. In AWS there is a NAT gateway for private subnets.

- Tenant or Project. Tenant is the most fundamental construct of the policy model. It represents an application’s entire lifecycle. It is:

  - A security boundary, i.e., all resources within a tenant have access to each other, but any external access is blocked unless explicitly exposed via an LB, IAM/AD policy, or SG.

  - A container of resources, with each resource implicitly tagged with the tenant name and other labels associated with the tenant. Deleting a tenant deletes all the resources underneath it. In Azure, a tenant is a resource group.

  - An access control boundary, i.e., each tenant can be accessed by N users, and each user can access M tenants. The software automatically propagates a user’s single sign-on access to a tenant into just-in-time console access to that tenant’s AWS and Azure resources.

  - The home of all the application’s logs, metrics, and alerts, presented in a single dashboard.

  - Linked to the application’s code repository for CI/CD. It provides a runtime build as a microservice construct, so each tenant can run its own builds on resources in that tenant without setting up a build system such as Jenkins.

  - Part of one and only one infrastructure. An infrastructure can have multiple tenants.

- Plan. This is a logical construct and a container of tenants. It holds governance policies for the tenants under it, for example resource usage quotas, allowed AMIs, allowed certificates, and labels. Each plan can be linked to one and only one infrastructure.

- User. This is an individual with a user ID. Each user could have access to one or more tenants/projects.

- Host. This is an EC2 instance or VM. This is where your application will run.

- Service. A service is your application code packaged as a single Docker image and running as a set of one or more containers. It is specified as image name, replicas, environment variables, and volume mappings (if any). DuploCloud also allows running applications that are not packaged as Docker images.

- LB. A service can be exposed outside the tenant/project via an LB and DNS name. An LB is defined as service name + container port + external port + internal or internet-facing. Optionally, a wildcard certificate can be chosen for SSL termination. If you make the LB internal, the service is exposed only within your VPC/VNET, to other applications.

- DNS Name. By default, when a Service is exposed via an LB, DuploCloud will create a friendly DNS Name. A user can choose to edit this name. The domain name must have been configured in the system by the admin.

- Docker Host or Fleet Host. If a host is marked as part of the fleet, then DuploCloud will use it to deploy containers. If the user needs a host for development purposes such as a test machine, then it would be marked as not part of the pool or fleet.
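To make the relationships above concrete, here is a minimal Python sketch of the policy model as plain data classes. The class and field names are hypothetical, chosen for illustration; they are not DuploCloud’s API or storage schema. The sketch also assumes every tenant belongs to a plan, through which it reaches its single infrastructure.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Infrastructure:
    name: str
    region: str  # one infrastructure maps 1:1 to a VPC/VNET in this region


@dataclass
class Plan:
    name: str
    infrastructure: Infrastructure  # a plan links to exactly one infrastructure
    allowed_amis: set[str] = field(default_factory=set)


@dataclass
class Service:
    image: str
    replicas: int = 1
    env: dict[str, str] = field(default_factory=dict)
    volumes: dict[str, str] = field(default_factory=dict)  # host path -> container path


@dataclass
class LoadBalancer:
    service: str
    container_port: int
    external_port: int
    internet_facing: bool = False  # internal LBs are reachable only inside the VPC/VNET


@dataclass
class Tenant:
    name: str
    plan: Plan
    services: dict[str, Service] = field(default_factory=dict)
    lbs: list[LoadBalancer] = field(default_factory=list)

    @property
    def infrastructure(self) -> Infrastructure:
        # A tenant belongs to one and only one infrastructure, reached via its plan.
        return self.plan.infrastructure

    def expose(self, lb: LoadBalancer) -> None:
        # Nothing is reachable from outside the tenant until an LB is declared.
        if lb.service not in self.services:
            raise ValueError(f"unknown service {lb.service!r} in tenant {self.name!r}")
        self.lbs.append(lb)
```

Reaching the infrastructure only through the plan makes the “one and only one infrastructure” rule structural, rather than something that has to be validated separately.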

Agent Modules

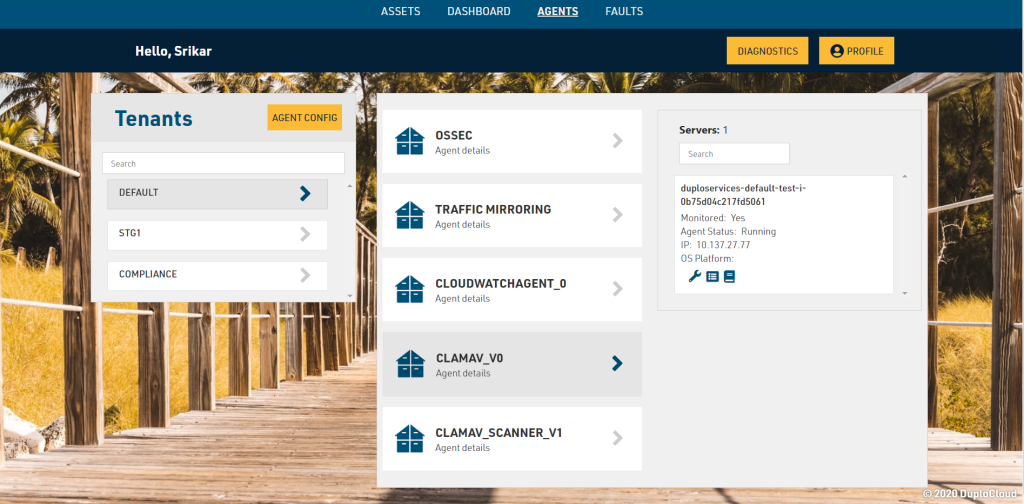

For many of the compliance controls, several agent-based software packages are installed in each in-scope VM. Examples include the Wazuh agent to fetch all the logs, the ClamAV virus scanner, AWS Inspector for vulnerability scanning, and the Azure OMS and CloudWatch agents for host metrics. While these agents are installed by default, DuploCloud also provides a framework where the user can specify an arbitrary list of agents in the following format, and DuploCloud installs them automatically in every launched VM. If any of these agents crashes, DuploCloud sends an alert; monitoring the health of the ClamAV agent is one good use case.

In the DuploCloud UI, this configuration is under the Security → Agents tab:

```json
[
  {
    "AgentName": "AwsAgent",
    "AgentWindowsPackagePath": "https://inspector-agent.amazonaws.com/windows/installer/latest/AWSAgentInstall.exe",
    "AgentLinuxPackagePath": "https://inspector-agent.amazonaws.com/linux/latest/install",
    "LinuxAgentInstallStatusCmd": "sudo service --status-all | grep -wc 'awsagent'",
    "WindowsAgentServiceName": "awsagent",
    "LinuxAgentServiceName": "awsagent",
    "LinuxInstallCmd": "sudo bash install"
  },
  {
    "AgentName": "ClamAV_v0",
    "AgentWindowsPackagePath": "",
    "LinuxAgentInstallStatusCmd": "sudo service clamav-freshclam status | grep -wc 'running'",
    "AgentLinuxPackagePath": "https://www.google.com",
    "WindowsAgentServiceName": "",
    "LinuxAgentServiceName": "clamav-freshclam",
    "LinuxInstallCmd": "OS_FAMILY=$(cat /etc/os-release | grep PRETTY_NAME); if [[ $OS_FAMILY == *'Ubuntu'* ]]; then sudo apt-get update; sudo apt-get install -y clamav; else sudo amazon-linux-extras install -y epel; sudo yum install clamav clamd -y; sudo service clamav-freshclam start; fi",
    "LinuxAgentUninstallStatusCmd": "OS_FAMILY=$(cat /etc/os-release | grep PRETTY_NAME); if [[ $OS_FAMILY == *'Ubuntu'* ]]; then sudo apt-get autoremove -y --purge clamav; else sudo yum remove -y clamav*; fi"
  },
  {
    "AgentName": "clamav_scanner_v2",
    "AgentWindowsPackagePath": "",
    "AgentLinuxPackagePath": "https://www.google.com",
    "WindowsAgentServiceName": "",
    "LinuxAgentServiceName": "clamav-freshclam",
    "LinuxInstallCmd": "sudo unlink /etc/cron.hourly/clamscan_*; sudo wget -O installclamavcron.sh https://raw.githubusercontent.com/duplocloud/compliance/master/installclamavcron.sh; sudo chmod 0755 installclamavcron.sh; sudo ./installclamavcron.sh",
    "LinuxAgentInstallStatusCmd": "ls -la /etc/cron.hourly | grep -wc 'clamscan_v1_hourly'",
    "LinuxAgentUninstallStatusCmd": "unlink /etc/cron.hourly/clamscan_v1_hourly"
  }
]
```
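Each status command in the list above ends in `grep -wc`, which prints a count of matching lines. The following Python sketch shows how such a list might be validated and how the counts could drive health alerts. The `REQUIRED_KEYS` set is an assumption inferred from the three entries shown, and the function names are hypothetical; this is not how DuploCloud itself processes the configuration.

```python
from __future__ import annotations

import json

# Assumed minimum schema, inferred from the three entries shown above.
REQUIRED_KEYS = {"AgentName", "LinuxAgentServiceName", "LinuxInstallCmd", "LinuxAgentInstallStatusCmd"}


def load_agents(text: str) -> list[dict]:
    """Parse the agent list and reject entries missing the assumed required keys."""
    agents = json.loads(text)
    for agent in agents:
        missing = REQUIRED_KEYS - set(agent)
        if missing:
            name = agent.get("AgentName", "<unnamed>")
            raise ValueError(f"{name}: missing {sorted(missing)}")
    return agents


def is_installed(status_output: str) -> bool:
    """Interpret the output of a status command ending in `grep -wc`.

    `grep -c` prints the number of matching lines, so any count above
    zero is treated as healthy.
    """
    try:
        return int(status_output.strip()) > 0
    except ValueError:
        return False  # empty or unexpected output counts as unhealthy


def agents_to_alert(agents: list[dict], outputs: dict[str, str]) -> list[str]:
    """Return the names of agents whose status check did not report a match."""
    return [a["AgentName"] for a in agents if not is_installed(outputs.get(a["AgentName"], ""))]
```

Treating unparseable output as unhealthy errs toward raising an alert, which suits a control whose purpose is to notice crashed agents.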

Prerequisite Reading

View the following two videos on DuploCloud’s website to become familiar with DuploCloud concepts before reading the control implementation details.

Explainer video #1

Explainer video #2

More information is available at duplocloud.com.

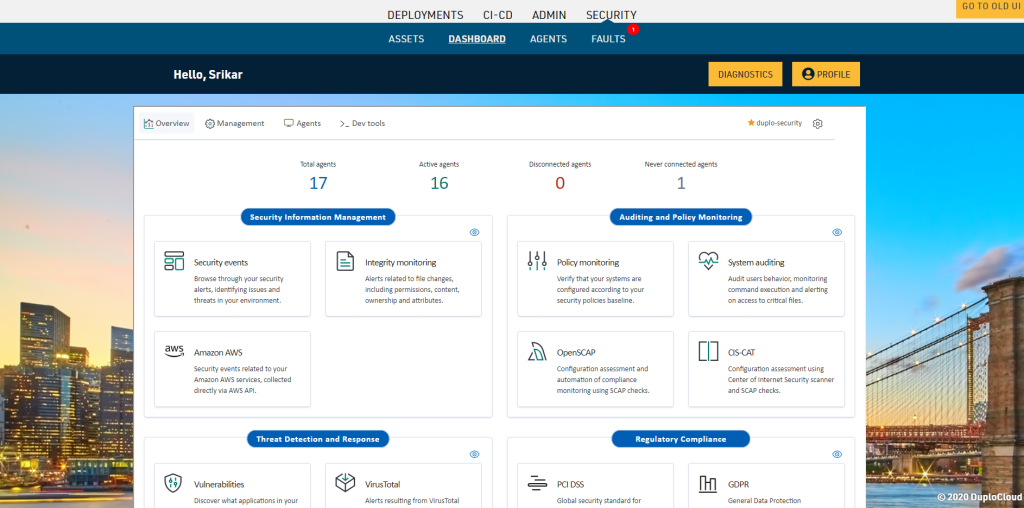

Security Information and Event Management

Every infrastructure has a centralized system to aggregate and process all events. The primary functions of the system are:

- Data Repository

- Event Processing Rules

- Dashboard

- Events and Alerting

Distributed agents of this platform are deployed at various endpoints (VMs in the cloud), where they collect event data from sources such as syslogs, virus scan results, NIDS alerts, and file integrity events. The data is sent to a centralized server, where a set of rules turns it into events and alerts; these are typically stored in Elasticsearch, from which dashboards can be generated. Data can also be ingested from CloudTrail, AWS Trusted Advisor, Azure Security Center, and other non-VM sources.

The strength of a SIEM is fundamentally judged by two factors: its rule set and its data parsers. Together these determine its coverage. Wazuh is an excellent SIEM with very extensive coverage: any required security function, be it FIM, CVE detection, virus scanning, or CloudTrail, has a ruleset in Wazuh. At the same time, the Wazuh platform is extensible and open source, and had over 1.5K GitHub stars and 369 GitHub forks at the time of writing. Subsequent sections describe where the core modules of our PCI DSS implementation can be found in the Wazuh dashboard.
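The rule-processing step described above can be sketched in a few lines of Python. This is only an illustration of the idea: real Wazuh rules are written in Wazuh’s own XML rule syntax, and the rule IDs, severity levels, and alert threshold below are hypothetical.

```python
from __future__ import annotations

import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    rule_id: int
    level: int               # severity: higher means more severe
    pattern: re.Pattern
    groups: tuple[str, ...]
    description: str


# Hypothetical rules matching ClamAV "FOUND" lines and sshd login failures.
RULES = (
    Rule(100001, 10, re.compile(r": .+ FOUND$"), ("virus",), "Antivirus reported an infected file"),
    Rule(100002, 5, re.compile(r"Failed password for"), ("authentication_failed",), "SSH login failure"),
)


def process(log_line: str, rules: tuple[Rule, ...] = RULES, alert_level: int = 7) -> dict | None:
    """Decode one log line into an event using the first matching rule."""
    for rule in rules:
        if rule.pattern.search(log_line):
            return {
                "rule": {
                    "id": rule.rule_id,
                    "level": rule.level,
                    "groups": list(rule.groups),
                    "description": rule.description,
                },
                "full_log": log_line,
                "alert": rule.level >= alert_level,  # only high-severity events alert
            }
    return None  # no rule matched, so no event is produced
```

The sketch makes the earlier point visible: a line no rule recognizes yields nothing, so coverage depends directly on the breadth of the rule set.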

PCI DSS - Superset of standards

The security guidelines from various standards overlap heavily. Some guidelines are more stringent than others, with PCI DSS and HITRUST the most stringent; their controls are a superset of the controls from most other standards, specifically SOC 2, GDPR, and HIPAA. This document describes the controls implemented by DuploCloud mapped to PCI DSS, which subsume SOC 2, GDPR, HIPAA, and HITRUST. The controls matrix at the end provides the mapping to PCI DSS and HIPAA.

Provisioning Time Controls

Network, Security and IAM

| # | PCI DSS Requirements v3.2.1 | DuploCloud Implementation |
|---|---|---|
| | **Requirement 1: Install and maintain a firewall configuration to protect cardholder data** | |
| 1. | 1.1.4 Requirements for a firewall at each Internet connection and between any demilitarized zone (DMZ) and the Internal network zone | Infrastructure is split into public and private subnets. Dev, stage, and production are split into different VPCs/VNETs. DuploCloud automation introduces the concept of a tenant, a logical construct above AWS/Azure that represents an application's entire lifecycle. Each tenant is a security boundary, implemented with a unique SG, IAM role, and instance profile per tenant in AWS, or a unique subnet, NSG, and managed identity per tenant in Azure. By default, no access into a tenant is allowed unless specific ports are exposed via an LB. |
| 2. | 1.1.5 Description of groups, roles, and responsibilities for management of network components. | DuploCloud overlays the logical constructs of tenant and infrastructure, which represent an application. Within a tenant there are services. By default, all resources within a tenant are labeled in the cloud with the tenant name. Further, users can set any tag at the tenant level, and it is automatically propagated to AWS/Azure artifacts. Background threads continuously keep the system in sync. |
| 3. | 1.2.1 Restrict inbound and outbound traffic to that which is necessary for the cardholder data environment, and specifically deny all other traffic. | Infrastructure is split into public and private subnets. Dev, stage, and production are split into different VPCs/VNETs. DuploCloud automation introduces the concept of a tenant, a logical construct above AWS/Azure that represents an application's entire lifecycle. Each tenant is a security boundary, implemented with a unique SG, IAM role, and instance profile per tenant in AWS, or a unique subnet, NSG, and managed identity per tenant in Azure. By default, no access into a tenant is allowed unless specific ports are exposed via an LB. |
| 4. | 1.3.1 Implement a DMZ to limit inbound traffic to only system components that provide authorized publicly accessible services, protocols, and ports. | Infrastructure is split into public and private subnets. Dev, stage, and production are split into different VPCs/VNETs. DuploCloud automation introduces the concept of a tenant, a logical construct above AWS/Azure that represents an application's entire lifecycle. Each tenant is a security boundary, implemented with a unique SG, IAM role, and instance profile per tenant in AWS, or a unique subnet, NSG, and managed identity per tenant in Azure. By default, no access into a tenant is allowed unless specific ports are exposed via an LB. |
| 5. | 1.3.2 Limit inbound Internet traffic to IP addresses within the DMZ. | Infrastructure is split into public and private subnets. Dev, stage, and production are split into different VPCs/VNETs. DuploCloud automation introduces the concept of a tenant, a logical construct above AWS/Azure that represents an application's entire lifecycle. Each tenant is a security boundary, implemented with a unique SG, IAM role, and instance profile per tenant in AWS, or a unique subnet, NSG, and managed identity per tenant in Azure. By default, no access into a tenant is allowed unless specific ports are exposed via an LB. |
| 6. | 1.3.4 Do not allow unauthorized outbound traffic from the cardholder data environment to the Internet. | By default, all outbound traffic uses a NAT gateway: nodes in the private subnets can reach the internet only through the NAT gateway. Additional subnet ACLs can be put in place if needed. In Azure, outbound traffic can be blocked in the VNET. |
| 7. | 1.3.6 Place system components that store cardholder data (such as a database) in an internal network zone, segregated from the DMZ and other untrusted networks. | Infrastructure is split into public and private subnets. Dev, stage, and production are split into different VPCs/VNETs. DuploCloud automation introduces the concept of a tenant, a logical construct above AWS/Azure that represents an application's entire lifecycle. Each tenant is a security boundary, implemented with a unique SG, IAM role, and instance profile per tenant in AWS, or a unique subnet, NSG, and managed identity per tenant in Azure. By default, no access into a tenant is allowed unless specific ports are exposed via an LB. The application is split into multiple tenants, each with all of its private resources in a private subnet. For example, all data stores can be in one tenant and the frontend UI in a different tenant. |
| 8. | 1.3.7 Do not disclose private IP addresses and routing information to unauthorized parties. | Private subnets and private Route 53 hosted zones / private Azure DNS zones are used. |
| 9. | 1.5 Ensure that security policies and operational procedures for managing firewalls are documented, in use, and known to all affected parties | A rules-based approach keeps the configuration error-free, consistent, and documented. Further documentation is the client's responsibility; DuploCloud also contributes documentation during the blueprinting process. |
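The tenant boundary described in the table above is a default-deny model. The Python sketch below expresses that decision logic under simplifying assumptions: `TenantBoundary` and `is_allowed` are hypothetical names, traffic from the internet is modeled as a `None` source, and an LB-exposed port is treated as reachable from any source (the sketch ignores the internal versus internet-facing distinction).

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class TenantBoundary:
    name: str
    lb_ports: set[int] = field(default_factory=set)         # ports exposed via an LB
    allowed_tenants: set[str] = field(default_factory=set)  # explicitly granted inter-tenant access


def is_allowed(source_tenant: str | None, target: TenantBoundary, port: int) -> bool:
    """Default-deny check; source_tenant is None for traffic from the internet."""
    if source_tenant == target.name:
        return True  # resources inside one tenant can reach each other
    if source_tenant is not None and source_tenant in target.allowed_tenants:
        return True  # explicit inter-tenant rule
    return port in target.lb_ports  # otherwise only LB-exposed ports are reachable
```

With a frontend tenant exposing only port 443 and a data tenant that admits only the frontend tenant, the data stores stay unreachable from the internet, which is the 1.3.6 layout described in the table.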

Secret Management

| # | PCI DSS Requirements v3.2.1 | DuploCloud Implementation |
|---|---|---|
| | **Requirement 2: Do not use vendor-supplied defaults for system passwords and other security parameters** | |
| 1. | 2.1 Always change vendor-supplied defaults and remove or disable unnecessary default accounts before installing a system on the network. This applies to ALL default passwords, including but not limited to those used by operating systems, software that provides security services, application and system accounts, point-of-sale (POS) terminals, Simple Network Management Protocol (SNMP) community strings, etc.) | DuploCloud supports both user-specified passwords and random password generation. All end-user access is via single sign-on and is passwordless. Even access to the AWS/Azure console is through a generated federated console URL that is valid for less than an hour. Because most access is JIT, the system operates with minimal user accounts. |
| 2. | 2.2.1 Implement only one primary function per server to prevent functions that require different security levels from co-existing on the same server. (For example, web servers, database servers, and DNS should be implemented on separate servers.) Note: Where virtualization technologies are in use, implement only one primary function per virtual system component. | DuploCloud orchestrates Kubernetes node selectors and VM labeling to achieve this. Non-container workloads are also supported, so automation can meet this control for them as well. For example, one can install Wazuh in one VM, Suricata in another, and Elasticsearch in a third. |
| 3. | 2.2.2 Enable only necessary services, protocols, daemons, etc., as required for the function of the system. | By default, no traffic is allowed into a tenant boundary unless exposed via an LB. DuploCloud automates configuration of the desired inter-tenant access without users manually writing scripts, and keeps these configurations in sync as the environment changes. DuploCloud also reconciles orphan resources in the system and cleans them up, including Docker containers, VMs, LBs, keys, S3 buckets, and various other resources. |
| 4. | 2.2.3 Implement additional security features for any required services, protocols, or daemons that are considered to be insecure. Note: Where SSL/early TLS is used, the requirements in Appendix A2 must be completed. | DuploCloud obtains certificates from Cert-Manager and automates SSL termination in the LB. |
| 5. | 2.2.4 Configure system security parameters to prevent misuse. | DuploCloud configures IAM policies in AWS / managed identities in Azure that implement separation of duties and least privilege, as well as S3 bucket policies. Infrastructure is split into public and private subnets. Dev, stage, and production are split into different VPCs/VNETs. DuploCloud automation introduces the concept of a tenant, a logical construct above AWS/Azure that represents an application's entire lifecycle. Each tenant is a security boundary, implemented with a unique SG, IAM role, and instance profile per tenant in AWS, or a unique subnet, NSG, and managed identity per tenant in Azure. By default, no access into a tenant is allowed unless specific ports are exposed via an LB. The application is split into multiple tenants, each with all of its private resources in a private subnet. For example, all data stores can be in one tenant and the frontend UI in a different tenant. |
| 6. | 2.2.5 Remove all unnecessary functionality, such as scripts, drivers, features, subsystems, file systems, and unnecessary web servers. | DuploCloud reconciles orphan resources in the system against the user specifications in its database and cleans them up, including Docker containers, VMs, LBs, keys, S3 buckets, and various other resources. All resources specified by the user in the database are tracked and audited every 30 seconds. |
| 7. | 2.3 Encrypt all non-console administrative access using strong cryptography. | SSL LBs and VPN connections are orchestrated. DuploCloud automates OpenVPN point-to-site (P2S) VPN user management by integrating it with the user's single sign-on, i.e., when a user's email is revoked from the DuploCloud portal, the user is automatically removed from the VPN server. |
| 8. | 2.4 Maintain an inventory of system components that are in scope for PCI DSS. | All resources are stored in the database, tracked, and audited. The software maintains an exportable inventory of resources. |
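For the random-password option under 2.1, a generator along these lines would work. This is a minimal Python sketch, not DuploCloud’s implementation: the 24-character default, the 12-character minimum, and the symbol set are assumptions. It uses the standard-library `secrets` module, which draws from the operating system’s cryptographically secure random source.

```python
import secrets
import string

SYMBOLS = "!@#$%^&*-_=+"  # assumed symbol set; adjust to what the target system accepts


def generate_password(length: int = 24) -> str:
    """Generate a random password containing lower, upper, digit, and symbol characters."""
    if length < 12:
        raise ValueError("length must be at least 12")
    alphabet = string.ascii_letters + string.digits + SYMBOLS
    while True:
        # secrets uses a CSPRNG, unlike the random module.
        candidate = "".join(secrets.choice(alphabet) for _ in range(length))
        # Rejection sampling: retry until every character class is present,
        # which avoids biasing character positions.
        if (any(c.islower() for c in candidate)
                and any(c.isupper() for c in candidate)
                and any(c.isdigit() for c in candidate)
                and any(c in SYMBOLS for c in candidate)):
            return candidate
```

At 24 characters, a candidate almost never lacks a class, so the loop rarely runs more than once.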

Encryption and Key management

| # | PCI DSS Requirements v3.2.1 | DuploCloud Implementation |
|---|---|---|
| | **Requirement 3: Protect stored cardholder data** | |
| 1. | 3.4.1 If disk encryption is used (rather than file- or column-level database encryption), logical access must be managed separately and independently of native operating system authentication and access control mechanisms (for example, by not using local user account databases or general network login credentials). Decryption keys must not be associated with user accounts. Note: This requirement applies in addition to all other PCI DSS encryption and key-management requirements. | DuploCloud orchestrates AWS KMS/Azure Key Vault keys to encrypt the AWS/Azure resources in a tenant, such as databases, S3, Elasticsearch, and Redis. Access to the keys is granted only to the instance profile, without any user accounts or keys. By default, DuploCloud creates a common key per deployment, but it can be configured to use one key per tenant. |
| 2. | 3.5.2 Restrict access to cryptographic keys to the fewest number of custodians necessary. | DuploCloud orchestrates AWS KMS/Azure Key Vault keys to encrypt the AWS/Azure resources in a tenant, such as databases, S3, Elasticsearch, and Redis. Access to the keys is granted only to the instance profile, without any user accounts or keys. By default, DuploCloud creates a common key per deployment, but it can be configured to use one key per tenant. |
| 3. | 3.5.3 Store secret and private keys used to encrypt/decrypt cardholder data in one (or more) of the following forms at all times: Note: It is not required that public keys be stored in one of these forms. | DuploCloud orchestrates AWS KMS/Azure Key Vault for this; those services provide the control, and DuploCloud inherits it. |
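To illustrate what “access to the keys is granted only to the instance profile” can look like in AWS, the Python sketch below builds a KMS key policy document. The role names are hypothetical, and the action lists are modeled on the key-administrator and key-user statements in AWS’s default key policy; check the current AWS documentation before relying on them. The sketch deliberately omits the account-root statement that AWS’s default policy includes, so IAM policies cannot widen access. Treat that trade-off as an assumption to review, since it also removes a recovery path if the admin role is lost.

```python
import json

# Modeled on AWS's default "key administrators" statement; no Encrypt/Decrypt here.
ADMIN_ACTIONS = [
    "kms:Create*", "kms:Describe*", "kms:Enable*", "kms:List*", "kms:Put*",
    "kms:Update*", "kms:Revoke*", "kms:Disable*", "kms:Get*", "kms:Delete*",
    "kms:TagResource", "kms:UntagResource",
    "kms:ScheduleKeyDeletion", "kms:CancelKeyDeletion",
]
# Modeled on AWS's default "key users" statement.
USAGE_ACTIONS = ["kms:Encrypt", "kms:Decrypt", "kms:ReEncrypt*", "kms:GenerateDataKey*", "kms:DescribeKey"]


def tenant_key_policy(account_id: str, tenant_role: str, admin_role: str) -> dict:
    """Build a key policy: the tenant's instance-profile role may use the key,
    a separate admin role may manage it, and no user principal can decrypt."""

    def role_arn(role: str) -> str:
        return f"arn:aws:iam::{account_id}:role/{role}"

    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "KeyAdministration",
                "Effect": "Allow",
                "Principal": {"AWS": role_arn(admin_role)},
                "Action": ADMIN_ACTIONS,
                "Resource": "*",
            },
            {
                "Sid": "TenantInstanceProfileUse",
                "Effect": "Allow",
                "Principal": {"AWS": role_arn(tenant_role)},
                "Action": USAGE_ACTIONS,
                "Resource": "*",
            },
        ],
    }
```

The serialized result (`json.dumps(policy)`) is the form expected by the `Policy` parameter of the KMS CreateKey and PutKeyPolicy APIs. Separating the management and usage principals keeps the number of key custodians small, which is the intent of 3.5.2.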

Transport Encryption

| # | PCI DSS Requirements v3.2.1 | DuploCloud Implementation |
|---|---|---|
| | **Requirement 4: Encrypt transmission of cardholder data across open, public networks** | |
| 1. | 4.1 Use strong cryptography and security protocols to safeguard sensitive cardholder data during transmission over open, public networks, including the following: Note: Where SSL/early TLS is used, the requirements in Appendix A2 must be completed. Examples of open, public networks include but are not limited to: | In the secure infrastructure blueprint, we adopt Application Load Balancers with HTTPS listeners, and HTTP listeners redirect to HTTPS. The DuploCloud software automatically configures the LB with the latest cipher policy. |

Access Control

|

PCI DSS Requirements v3.2.1 |

DuploCloud Implementation | |

|

Requirement 7: Restrict access to cardholder data by business need to know | ||

|

1. |

7.1.1 Define access needs for each role, including:

|

DuploCloud tenant model has access controls built in. This allows access to various tenant based on the user roles. This access control mechanism automatically integrates into the VPN client as well i.e. each user has a static IP in the VPN and based on his tenant access his IP is added to the respective tenant's SG in AWS/NSG in Azure. Tenant access policies will automatically apply SG or IAM based policy in AWS/NSG, or Managed Identity in Azure based on the resource type. |

|

7.2 Establish an access control system(s) for systems components that restricts access based on a user's need to know and is set to “deny all” unless specifically allowed. This access control system(s) must include the following |

User access to AWS/Azure console is granted based on tenant permissions and least privilege and a Just in time federated token that expires in less than an hour. Admins have privileged access and read-only user is another role | |

|

2. |

7.2.1 Coverage of all system components. |

AWS resource access is controlled based on IAM role, SG and static VPN client Ips/ Azure resource access in controlled by NSG, Managed Identity and static VPN client Ips that are all implicitly orchestrated and kept up to date |

|

3. |

7.2.3 Default deny-all setting. |

This is the default DuploCloud implementation of Sg and IAM roles in AWS/NSG and Managed Identity in Azure |

|

Requirement 8: Identify and authenticate access to system components | ||

|

4. |

8.1.1 Assign all users a unique ID before allowing them to access system components or cardholder data. |

DuploCloud integrates with client's IDP like G Suite and O365 for access to the portal. From there a federated logic in done for AWS/Azure resource access |

|

5. |

8.1.2 Control addition, deletion, and modification of user IDs, credentials, and other identifier objects. |

This is done at infra level in DuploCloud portal using single sign on |

|

6. |

8.1.3 Immediately revoke access for any terminated users. |

DuploCloud integrates with client's IDP like G Suite and O365 for access to the portal. The moment the email is disabled all access is revoked. Even if the user has say a private key to a VM even then he cannot connect because VPN will be deprovisioned |

|

7. |

8.1.4 Remove/disable inactive user accounts within 90 days. |

DuploCloud integrates with client's IDP like G Suite and O365 for access to the portal. The moment the email is disabled all access is revoke. Even if the user has say a private key to a VM even then he cannot connect because VPN will be deprovisioned |

|

8. |

8.1.5 Manage IDs used by third parties to access, support, or maintain system components via remote access as follows:

|

DuploCloud integrates by calling STS API to provide JIT token and URL |

|

9. |

8.1.6 Limit repeated access attempts by locking out the user ID after not more than six attempts. |

DuploCloud integrates with client's IDP like G Suite and O365 for access to the portal. When DuploCloud managed OpenVPN is used it is setup to lock the user out after failed attempts |

|

10. |

8.1.7 Set the lockout duration to a minimum of 30 minutes or until an administrator enables the user ID. |

DuploCloud integrates with client's IDP like G Suite and O365 for access to the portal. When DuploCloud managed OpenVPN is used it is setup to lock the user out after failed attempts. In Open VPN an admin has to unlock the user |

|

11. |

8.1.8 If a session has been idle for more than 15 minutes, require the user to re-authenticate to re-activate the terminal or session. |

DuploCloud single sign on has configurable timeout. For AWS/Azure resource access we provide JIT access |

|

12. |

8.2 In addition to assigning a unique ID, ensure proper user-authentication management for non-consumer users and administrators on all system components by employing at least one of the following methods to authenticate all users:

|

DuploCloud relies on the client's single sign on / IDP. If the user secures his corporate login using these controls then by virtue of single sign on, this get implemented in the infrastructure. |

|

13. |

8.2.1 Using strong cryptography, render all authentication credentials (such as passwords/phrases) unreadable during transmission and storage on all system components. |

Encryption at REST is done via AWS KMS/Azure KeyVault and in transit via SSL |

|

14. |

8.2.2 Verify user identity before modifying any authentication credential—for example, performing password resets, provisioning new tokens, or generating new keys. |

DuploCloud integrates with client's IDP like G Suite and O365 for access to the portal. |

|

15. |

8.2.3 Passwords/phrases must meet the following:

Alternatively, the passwords/phrases must have complexity and strength at least equivalent to the parameters specified above. |

Enforced by AWS/Azure and should be enforced by client's IDP. DuploCloud integrates with the IDP. The control should be implemented by the organization IDP. |

|

16. |

8.2.4 Change user passwords/passphrases at least every 90 days. |

DuploCloud integrates with client's IDP like G Suite and O365 for access to the portal. |

|

17. |

8.2.5 Do not allow an individual to submit a new password/phrase that is the same as any of the last four passwords/phrases he or she has used. |

Enforced by AWS/Azure and should be enforced by client's IDP. DuploCloud integrates with the IDP. The control should be implemented by the organization IDP. |

|

18. |

8.2.6 Set passwords/phrases for first time use and upon reset to a unique value for each user, and change immediately after the first use. |

Enforced by AWS/Azure and should be enforced by client's IDP. DuploCloud integrates with the IDP. The control should be implemented by the organization IDP. |

|

19. |

8.3 Secure all individual non-console administrative access and all remote access to the CDE using multi-factor authentication. Note: Multi-factor authentication requires that a minimum of two of the three authentication methods (see Requirement 8.2 for descriptions of authentication methods) be used for authentication. Using one factor twice (for example, using two separate passwords) is not considered multi-factor authentication. |

DuploCloud integrates with the client's IDP, such as G Suite or O365, for access to the portal. OpenVPN has MFA enabled. |

|

20. |

8.3.1 Incorporate multi-factor authentication for all non-console access into the CDE for personnel with administrative access. |

DuploCloud integrates with the client's IDP, such as G Suite or O365, for access to the portal. OpenVPN has MFA enabled. |

|

21. |

8.3.2 Incorporate multi-factor authentication for all remote network access (both user and administrator and including third-party access for support or maintenance) originating from outside the entity’s network. |

DuploCloud integrates with the client's IDP, such as G Suite or O365, for access to the portal. OpenVPN has MFA enabled. |

|

22. |

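8.7 All access to any database containing cardholder data (including access by applications, administrators, and all other users) is restricted as follows:

- All user access to, user queries of, and user actions on databases are through programmatic methods.

- Only database administrators have the ability to directly access or query databases.

- Application IDs for database applications can only be used by the applications (and not by individual users or other non-application processes).

|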

Through the IAM integration with the database, SQL connections are also made via the instance profile. For users, individual JIT access is granted that lasts only 15 minutes. |
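The 8.7 row above grants each user individual just-in-time (JIT) database access that lasts only 15 minutes. The Python sketch below models such a time-bound grant. It is an illustration under stated assumptions: `JitGrant` and `issue_grant` are hypothetical names, not DuploCloud's API, and a real implementation would also have to revoke the underlying database credential when the window closes.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional

# Lifetime taken from the 8.7 row; the class below is a hypothetical model, not DuploCloud's API.
JIT_TTL = timedelta(minutes=15)


@dataclass(frozen=True)
class JitGrant:
    user: str
    database: str
    issued_at: datetime

    @property
    def expires_at(self) -> datetime:
        return self.issued_at + JIT_TTL

    def is_valid(self, now: datetime) -> bool:
        # Usable only inside the half-open window [issued_at, expires_at).
        return self.issued_at <= now < self.expires_at


def issue_grant(user: str, database: str, now: Optional[datetime] = None) -> JitGrant:
    """Issue an individual, time-bound grant for one user and one database."""
    return JitGrant(user, database, now if now is not None else datetime.now(timezone.utc))


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
    grant = issue_grant("alice@example.com", "payments-db", now=t0)
    print(grant.is_valid(t0 + timedelta(minutes=10)))  # True
    print(grant.is_valid(t0 + timedelta(minutes=16)))  # False
```

Because each grant carries its own issue time, two users (or the same user twice) never share a credential window, which is what "individual" JIT access implies.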

Post Provisioning Controls

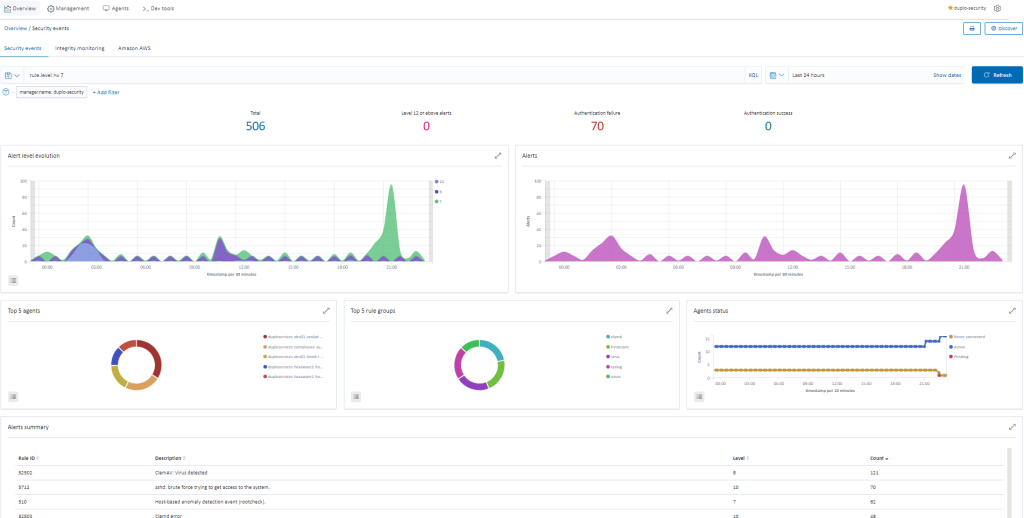

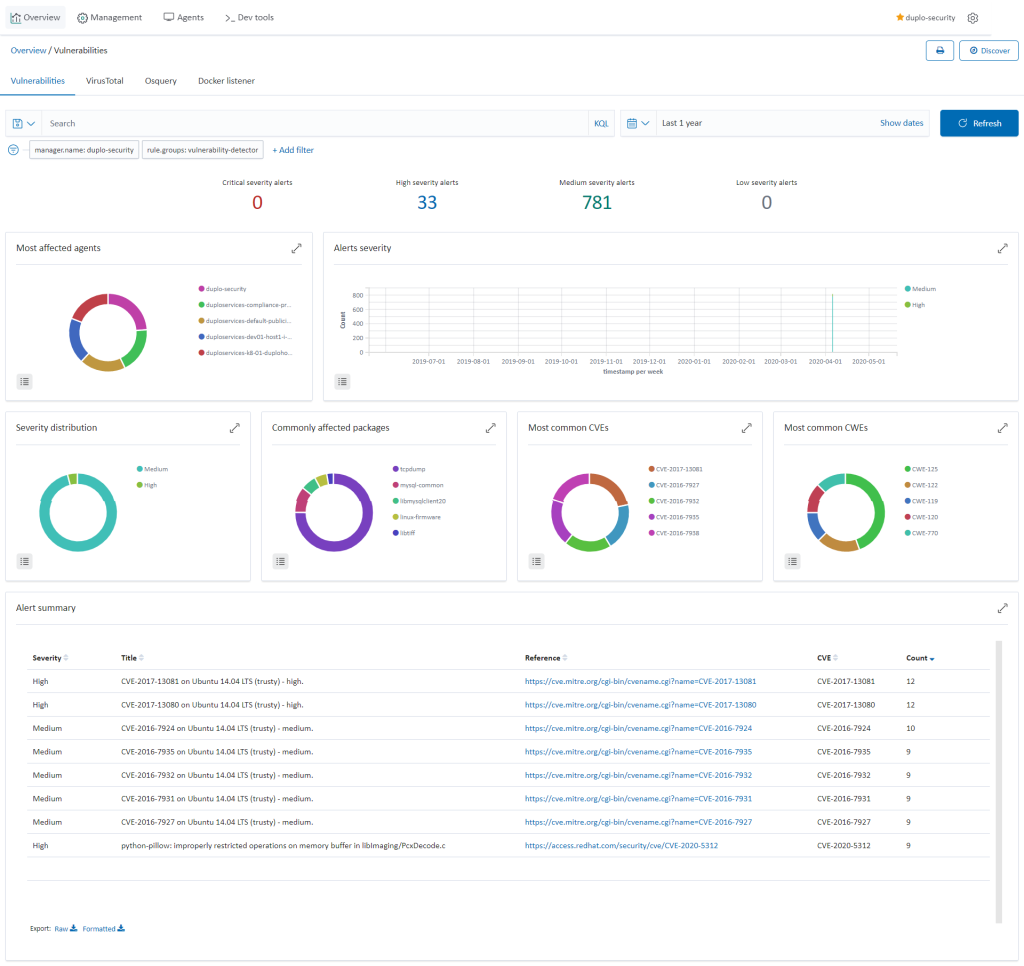

Vulnerability Detection (Requirement 6.1)

Agents collect the list of all installed applications and send it to the Wazuh master, which compares it against a global vulnerability database built from public OVAL CVE repositories. To check the vulnerabilities, go to “Security Dashboard > Vulnerabilities”. For more information on the implementation, refer to the Wazuh Vulnerability Detection Guide.

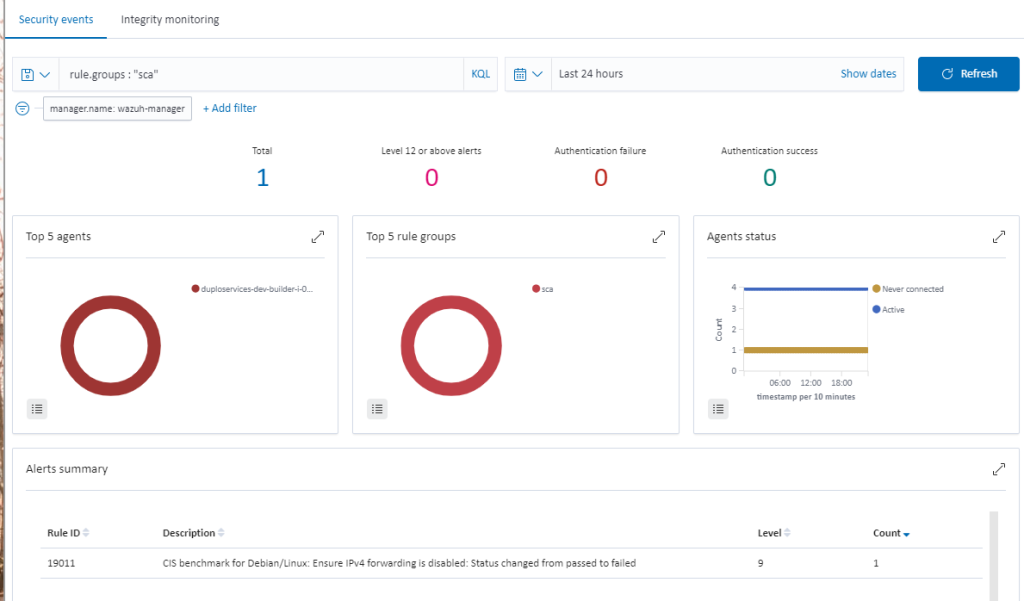
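Conceptually, the matching step compares each installed package version with the first fixed version published for a known CVE. The Python sketch below illustrates only that comparison. The package names, the placeholder IDs `CVE-EXAMPLE-000N`, and the dotted-numeric version parser are assumptions for illustration; real OVAL feeds use distribution-specific version rules (for example, Debian epochs) that this sketch does not handle.

```python
from typing import Dict, List, NamedTuple, Tuple


class Advisory(NamedTuple):
    cve_id: str
    package: str
    fixed_in: str  # first version that is no longer vulnerable


def parse_version(version: str) -> Tuple[int, ...]:
    # Simplification: handles purely numeric dotted versions such as "1.2.10".
    return tuple(int(part) for part in version.split("."))


def find_vulnerable(installed: Dict[str, str], advisories: List[Advisory]) -> List[Tuple[str, str]]:
    """Return (package, CVE id) pairs where the installed version predates the fix."""
    hits = []
    for adv in advisories:
        version = installed.get(adv.package)
        if version is not None and parse_version(version) < parse_version(adv.fixed_in):
            hits.append((adv.package, adv.cve_id))
    return hits


if __name__ == "__main__":
    # Hypothetical inventory and advisories; the IDs are placeholders, not real CVEs.
    inventory = {"examplelib": "1.2.3", "otherlib": "2.0.0"}
    feed = [
        Advisory("CVE-EXAMPLE-0001", "examplelib", "1.2.10"),
        Advisory("CVE-EXAMPLE-0002", "otherlib", "1.9.0"),
    ]
    print(find_vulnerable(inventory, feed))  # [('examplelib', 'CVE-EXAMPLE-0001')]
```

Comparing parsed tuples rather than raw strings matters here: as plain strings, "1.2.10" sorts before "1.2.3", which would hide the vulnerable version.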

CIS Benchmarks (Requirement 1)

Wazuh provides the Security Configuration Assessment (SCA) module, which scans hosts against hardening and configuration policies. To check the SCA report, go to “Security Dashboard > Security Events” and search for rule.groups: "sca". For more information, refer to the Wazuh SCA documentation.

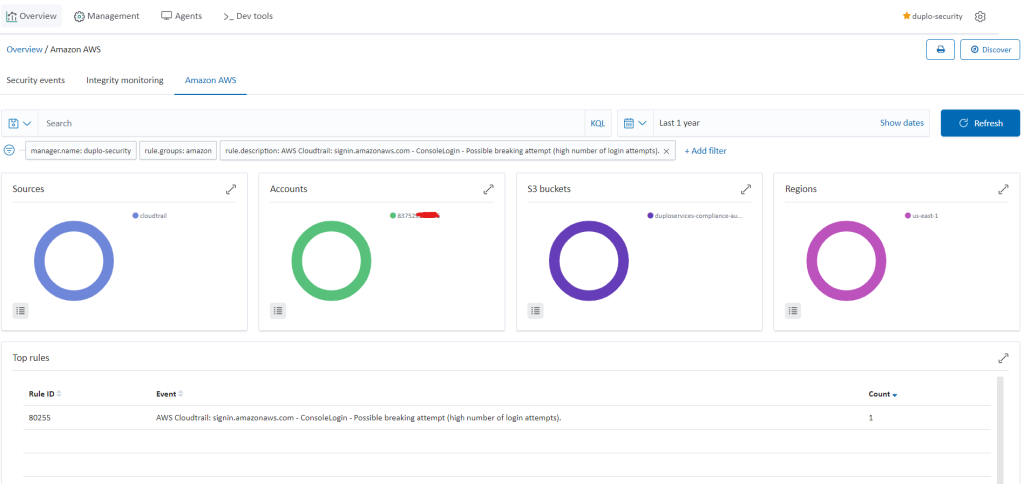

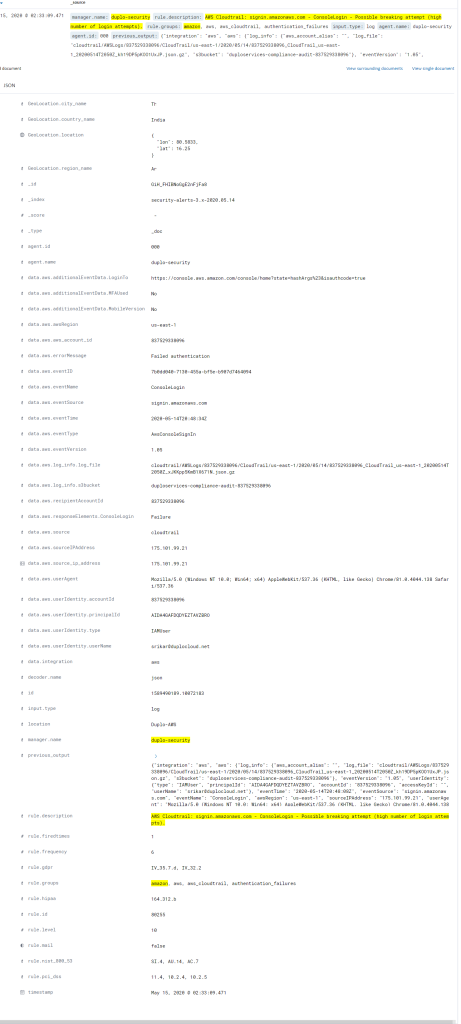
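Conceptually, an SCA policy is a list of checks, each asserting that a configuration file does or does not contain a given setting. The Python sketch below evaluates two CIS-style SSH hardening checks against the text of an `sshd_config` file. It is a simplified illustration: the check IDs are hypothetical, the parser ignores `Match` blocks and `keyword=value` syntax, and Wazuh's real policies are YAML files evaluated by the agent.

```python
from typing import Dict, Optional

# Illustrative CIS-style checks: (check id, sshd_config keyword, required value).
# The IDs are hypothetical and not taken from any Wazuh SCA policy file.
CHECKS = [
    ("ssh-001", "PermitRootLogin", "no"),
    ("ssh-002", "PasswordAuthentication", "no"),
]


def effective_settings(config_text: str) -> Dict[str, str]:
    """Map lowercase keyword -> value for the global section of an sshd_config."""
    settings: Dict[str, str] = {}
    for raw in config_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank lines and comments
        parts = line.split(None, 1)
        keyword = parts[0].lower()
        if keyword == "match":
            break  # lines after a Match block apply only conditionally; ignored here
        if len(parts) == 2:
            # sshd uses the first value it obtains for each keyword.
            settings.setdefault(keyword, parts[1].strip())
    return settings


def evaluate(config_text: str) -> Dict[str, bool]:
    """Return check id -> pass/fail; a missing keyword counts as a failure."""
    settings = effective_settings(config_text)
    results = {}
    for check_id, keyword, expected in CHECKS:
        value: Optional[str] = settings.get(keyword.lower())
        results[check_id] = value is not None and value.lower() == expected
    return results
```

Treating a missing keyword as a failure is a conservative choice: the compiled-in sshd default may differ between OpenSSH versions, so an explicit setting is required to pass.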

Cloud Vulnerabilities & Intrusion Detection (Requirement 11.4)

DuploCloud integrates with and orchestrates AWS Inspector, CloudTrail, Trusted Advisor, VPC flow logs, and GuardDuty. To view the alerts, go to “SIEM Dashboard > Amazon AWS”.

The following is an example of an alert for a break-in attempt on the AWS Console:

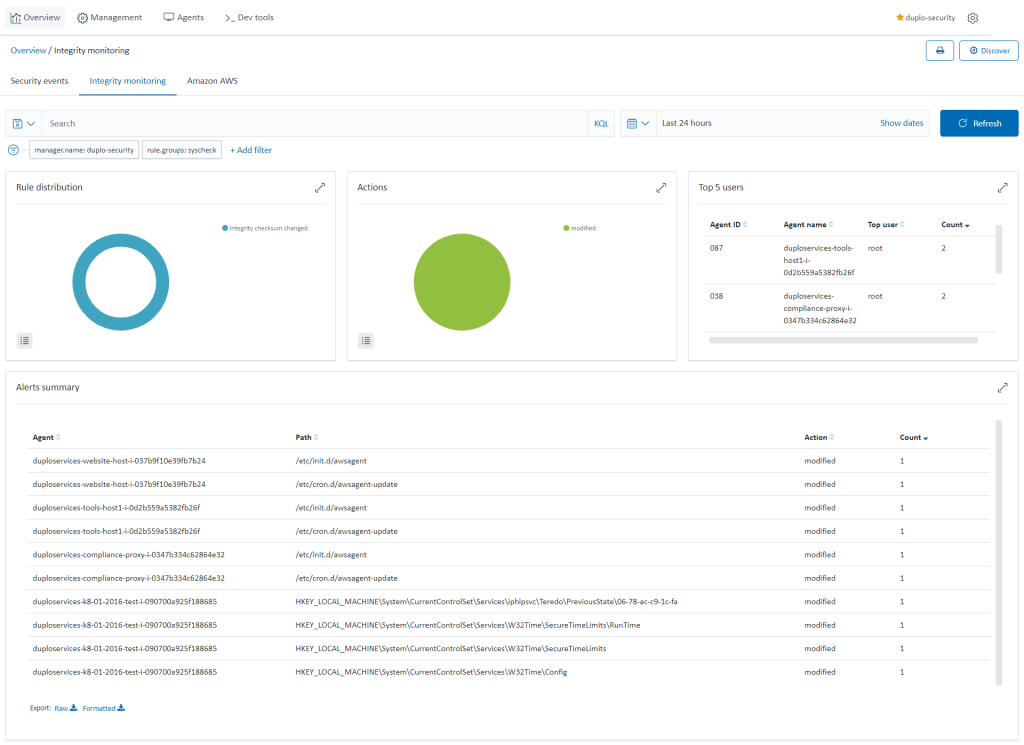
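To make such an alert concrete, the Python sketch below shows the kind of rule that flags a console break-in attempt: it counts failed `ConsoleLogin` events per source IP in a CloudTrail log file. The field names (`Records`, `eventName`, `sourceIPAddress`, `responseElements.ConsoleLogin`) follow CloudTrail's record format; the threshold of three failures is an arbitrary assumption, and this is not how Wazuh or GuardDuty implement the detection internally.

```python
import json
from collections import Counter
from typing import Dict


def failed_console_logins(log_file_json: str, threshold: int = 3) -> Dict[str, int]:
    """Return source IPs with at least `threshold` failed AWS Console sign-ins."""
    records = json.loads(log_file_json).get("Records", [])
    failures = Counter(
        record.get("sourceIPAddress", "unknown")
        for record in records
        if record.get("eventName") == "ConsoleLogin"
        # responseElements can be null in CloudTrail records, hence the `or {}`.
        and (record.get("responseElements") or {}).get("ConsoleLogin") == "Failure"
    )
    return {ip: count for ip, count in failures.items() if count >= threshold}
```

For example, three failed sign-ins from one address in a single log file would be reported, while a lone typo from another address would stay below the threshold.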

File Integrity Monitoring (Requirement 10.5.5)

Agents on the hosts monitor key files for changes by verifying the checksum and attributes of each monitored file. The system check (syscheck) runs every 12 hours. To check file integrity monitoring, go to “SIEM Dashboard > Integrity Monitoring”. For more information, refer to the Wazuh File Integrity Monitoring documentation.

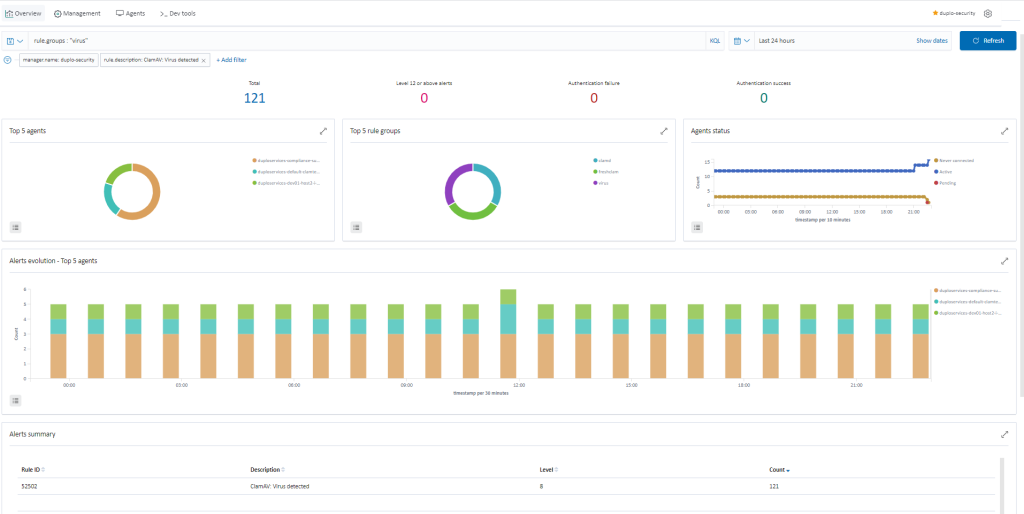
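The checksum-and-attributes comparison described above can be sketched in Python as a baseline snapshot followed by a diff. This is an illustrative simplification, not Wazuh's implementation: it records only the SHA-256 hash, permission bits, and size of each file, whereas Wazuh's syscheck can also track attributes such as ownership and modification time.

```python
import hashlib
import os
import tempfile
from typing import Dict, Iterable, List, Tuple

Snapshot = Dict[str, Tuple[str, int, int]]  # path -> (sha256, mode, size)


def sha256_of(path: str) -> str:
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def snapshot(paths: Iterable[str]) -> Snapshot:
    """Record a checksum and two attributes (permission bits, size) per existing file."""
    snap: Snapshot = {}
    for path in paths:
        if os.path.isfile(path):
            st = os.stat(path)
            snap[path] = (sha256_of(path), st.st_mode, st.st_size)
    return snap


def diff(baseline: Snapshot, current: Snapshot) -> Dict[str, List[str]]:
    """Classify monitored paths as added, removed, or modified since the baseline."""
    return {
        "added": sorted(current.keys() - baseline.keys()),
        "removed": sorted(baseline.keys() - current.keys()),
        "modified": sorted(p for p in baseline.keys() & current.keys() if baseline[p] != current[p]),
    }


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        watched = os.path.join(d, "app.conf")
        with open(watched, "w") as f:
            f.write("debug=false\n")
        before = snapshot([watched])
        with open(watched, "w") as f:
            f.write("debug=true\n")
        print(diff(before, snapshot([watched]))["modified"])
```

Running `snapshot` on a schedule (every 12 hours, per the text) and diffing against the stored baseline yields the added, removed, and modified events that a FIM dashboard reports.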

Virus Scanning (Requirements 5.1, 5.2 & 5.3)

DuploCloud enables ClamAV deployment via agent modules, with alerts collected and categorized in the SIEM. Make sure the ClamAV agent module is enabled in DuploCloud; for how to enable it, refer to the config in the “Other Agents” section. DuploCloud ensures that the ClamAV agent is running and raises a fault in the DuploCloud portal if it fails. To view the virus alerts, go to “SIEM Dashboard > Security Events” and add the filter rule.groups: virus.

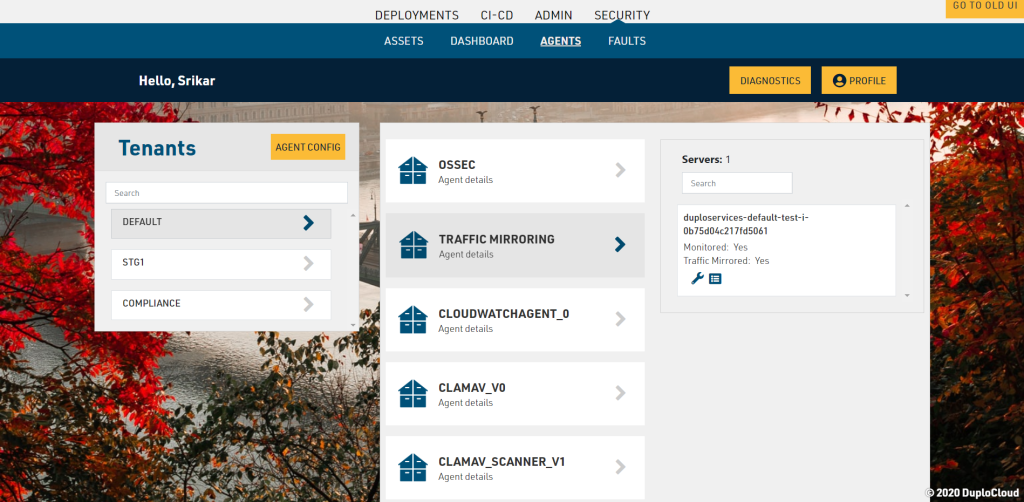

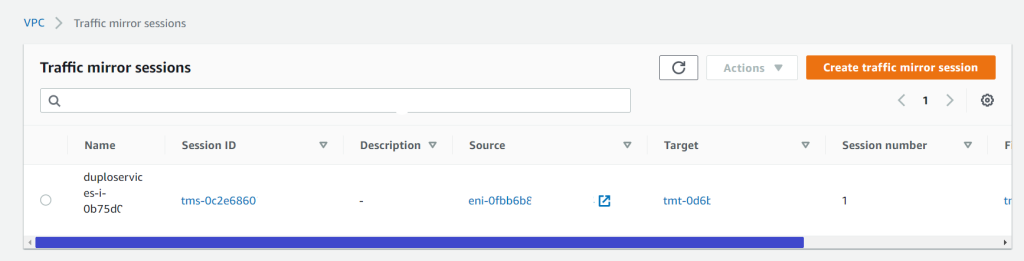
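DuploCloud's fault-raising mechanism is internal to the platform; the Python sketch below only illustrates the general watchdog idea behind it. It assumes a Linux host, where process names can be read from `/proc/<pid>/comm`, and the daemon names `clamd` and `wazuh-agentd` are assumptions about what is being watched.

```python
import os
from typing import Callable, Iterable, List, Set

# Hypothetical daemon names for the agents being watched; adjust to the actual deployment.
REQUIRED_AGENTS = ["clamd", "wazuh-agentd"]


def running_process_names(proc_root: str = "/proc") -> Set[str]:
    """Collect process names on Linux from /proc/<pid>/comm (truncated to 15 chars)."""
    names: Set[str] = set()
    for entry in os.listdir(proc_root):
        if not entry.isdigit():
            continue
        try:
            with open(os.path.join(proc_root, entry, "comm")) as f:
                names.add(f.read().strip())
        except OSError:
            continue  # the process exited or is not readable
    return names


def check_agents(required: Iterable[str], is_running: Callable[[str], bool]) -> List[str]:
    """Return one fault message per required agent that is not running."""
    return [f"FAULT: agent '{name}' is not running" for name in required if not is_running(name)]


if __name__ == "__main__" and os.path.isdir("/proc"):
    current = running_process_names()
    for fault in check_agents(REQUIRED_AGENTS, current.__contains__):
        print(fault)  # a real watchdog would raise this in a portal or alerting system
```

Passing `is_running` as a function keeps the fault logic independent of how process state is gathered, so the same check works with `/proc`, a container runtime, or a service manager.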

Network Intrusion Detection (Requirement 11.4)

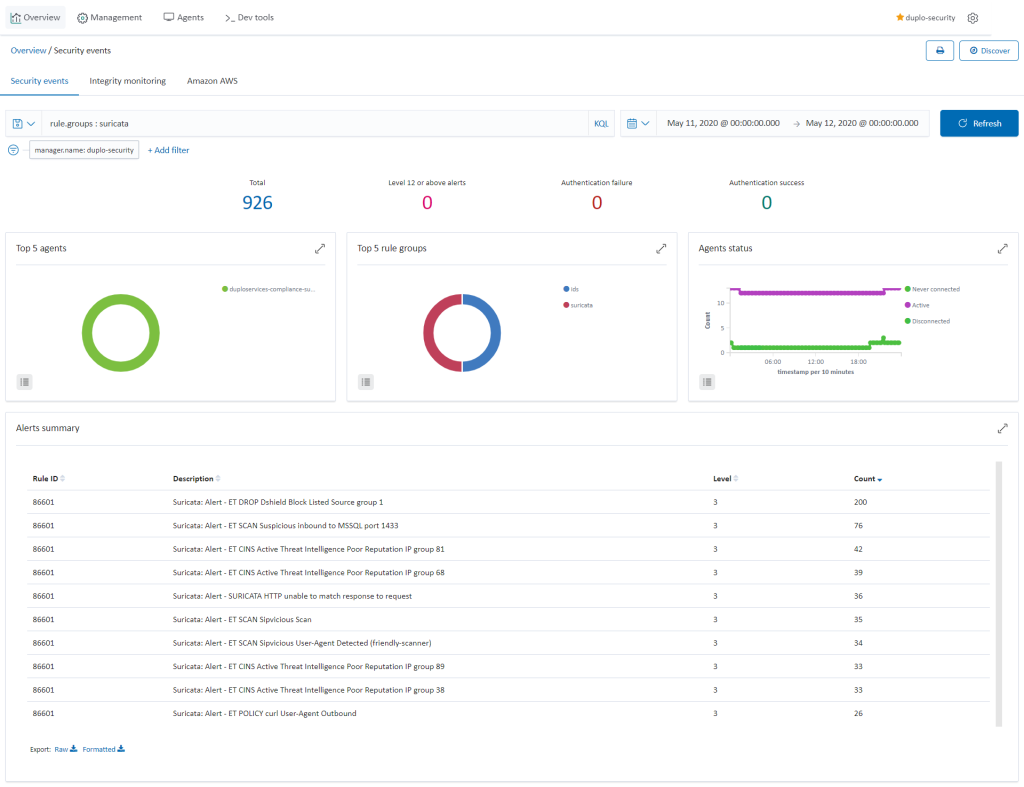

DuploCloud uses Suricata as the NIDS processing engine. To avoid burdening the hosts with additional services, it relies on AWS traffic mirroring: DuploCloud spawns a dedicated host running Suricata, which receives mirrored traffic from all hosts. Suricata analyzes this traffic and writes its results to files that Wazuh agents collect and send to the SIEM. To check network intrusion alerts, go to “SIEM Dashboard > Security Events” and search for rule.groups: "suricata". For more information, refer to AWS Traffic Mirroring and Suricata.
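Suricata writes its results as newline-delimited JSON (the EVE log, commonly `eve.json`), which is the kind of file a Wazuh agent can collect. The Python sketch below filters such a log for alert events. The `event_type`, `alert.signature`, `alert.severity`, `src_ip`, and `dest_ip` fields follow Suricata's EVE format, while the severity cut-off is an arbitrary assumption for illustration.

```python
import json
from typing import Iterable, List, Optional, Tuple

Alert = Tuple[str, Optional[str], Optional[str]]  # (signature, src_ip, dest_ip)


def high_priority_alerts(eve_lines: Iterable[str], max_severity: int = 2) -> List[Alert]:
    """Keep EVE 'alert' events whose severity is at least as urgent as max_severity.

    In Suricata, lower severity numbers are more severe (1 is the highest).
    """
    alerts: List[Alert] = []
    for line in eve_lines:
        if not line.strip():
            continue
        event = json.loads(line)
        if event.get("event_type") != "alert":
            continue  # skip flow, dns, http, and other event types
        alert = event.get("alert", {})
        severity = alert.get("severity")
        if severity is not None and severity <= max_severity:
            alerts.append((alert.get("signature", ""), event.get("src_ip"), event.get("dest_ip")))
    return alerts
```

Filtering on `event_type` first matters because an EVE log interleaves alerts with much higher-volume protocol and flow records.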

Inventory Management (Requirement 11)

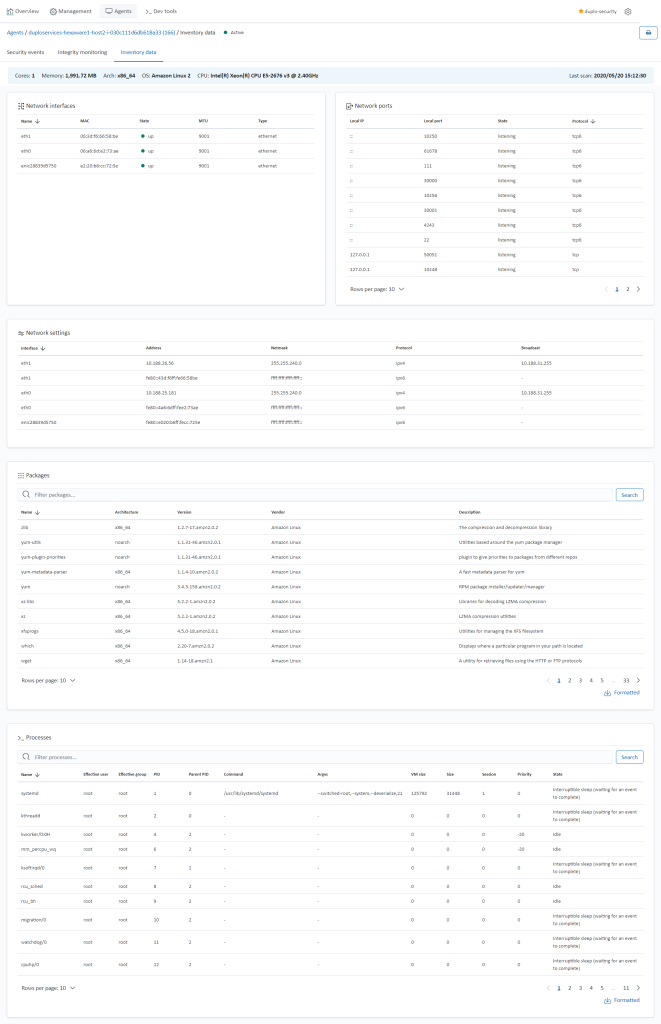

DuploCloud collects and stores inventory information from the cloud infrastructure and, at the operating-system level, from each host. It also maintains an inventory of all Docker containers currently running on each server. For cloud inventory, go to “Security > Assets”; for Docker containers, go to “Admin > Metrics”; and for OS-level inventory (installed apps, network configuration, open ports, etc.), go to “SIEM Dashboard > Agents”, select the agent of your choice, and select the inventory data. For more information, refer to System Inventory.

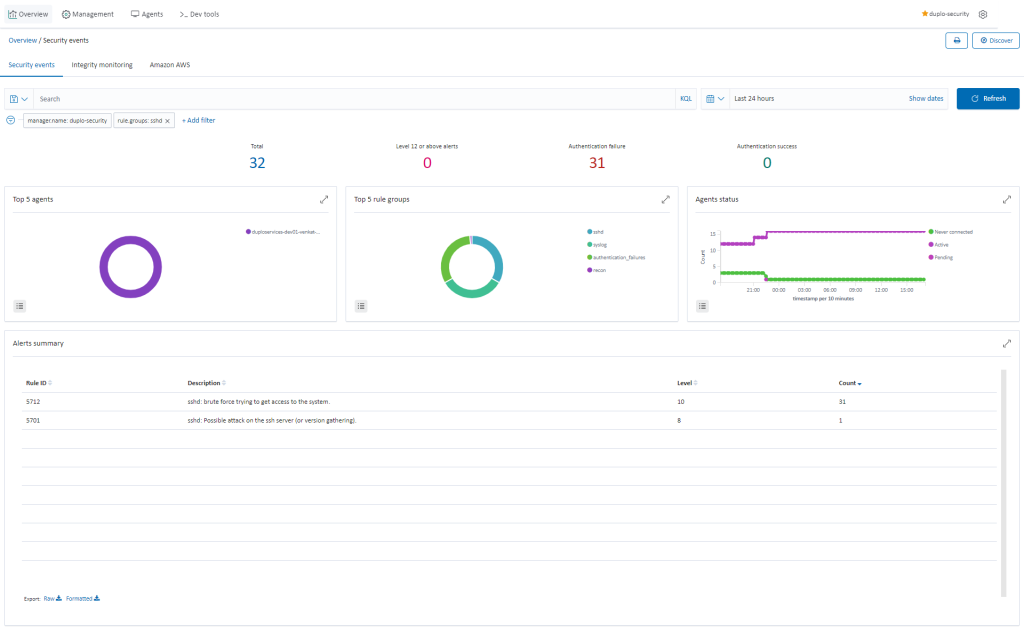
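As an illustration of host-level container inventory, the Python sketch below lists running containers with the Docker CLI and normalizes the output into records. This is not how DuploCloud collects its inventory; it only assumes the `docker` CLI is installed and relies on `docker ps --format '{{json .}}'`, which prints one JSON object per running container.

```python
import json
import subprocess
from typing import Dict, List


def parse_docker_ps(output: str) -> List[Dict[str, str]]:
    """Turn `docker ps --format '{{json .}}'` output (one JSON object per line) into records."""
    inventory = []
    for line in output.splitlines():
        if not line.strip():
            continue
        container = json.loads(line)
        inventory.append({
            "id": container["ID"],
            "name": container["Names"],
            "image": container["Image"],
            "status": container["Status"],
        })
    return inventory


def collect_container_inventory() -> List[Dict[str, str]]:
    """Query the local Docker daemon; requires the docker CLI and daemon access."""
    result = subprocess.run(
        ["docker", "ps", "--format", "{{json .}}"],
        capture_output=True, text=True, check=True,
    )
    return parse_docker_ps(result.stdout)
```

Separating parsing from collection keeps the parsing testable without a Docker daemon and lets the same records be exported alongside cloud and OS inventory.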

Host Intrusion Detection (Requirement 10.6.1)

Agents installed by DuploCloud combine anomaly-based and signature-based techniques to detect intrusions or software misuse. They can also be used to monitor user activities, assess system configuration, and detect vulnerabilities.

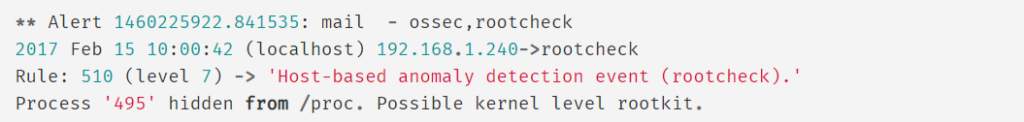
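The signature-based half of this approach amounts to matching log lines against known patterns and assigning each match a severity level. The Python sketch below shows that idea with two hypothetical rules (the rule IDs, levels, and patterns are invented for illustration); Wazuh's actual ruleset is written in XML, uses decoders, and also correlates events over time.

```python
import re
from typing import Dict, List, NamedTuple


class Rule(NamedTuple):
    rule_id: str
    level: int
    pattern: re.Pattern
    description: str


# Hypothetical signatures for illustration only; not taken from the Wazuh ruleset.
RULES = [
    Rule("100001", 5,
         re.compile(r"sshd\[\d+\]: Failed password for (?:invalid user )?(?P<user>\S+) from (?P<ip>\S+)"),
         "SSH authentication failure"),
    Rule("100002", 10,
         re.compile(r"sudo: .*COMMAND=/bin/(?:ba)?sh\b"),
         "Root shell opened via sudo"),
]


def match_rules(log_line: str) -> List[Dict[str, object]]:
    """Return an alert for every signature that matches the log line."""
    alerts = []
    for rule in RULES:
        match = rule.pattern.search(log_line)
        if match:
            alerts.append({
                "rule_id": rule.rule_id,
                "level": rule.level,
                "description": rule.description,
                "fields": match.groupdict(),  # extracted values, e.g. user and source IP
            })
    return alerts
```

Extracting named fields such as `user` and `ip` is what lets later stages aggregate events, for example to escalate repeated SSH failures from one address.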

Host Anomaly Detection

Anomaly detection refers to finding patterns in the system that do not match expected behavior. Once malware (e.g., a rootkit) is installed on a system, it modifies the system to hide itself from the user. Although malware uses a variety of techniques to accomplish this, Wazuh uses a broad-spectrum approach to find anomalous patterns that indicate possible intruders. This includes:

- File integrity monitoring

- Check running processes

- Check hidden ports

- Check unusual files and permissions

- Check hidden files using system calls

- Scan the /dev directory

- Scan network interfaces

- Rootkit checks

For more information, refer to Wazuh Anomaly Detection.
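To make one of these checks concrete, the Python sketch below approximates the "unusual files and permissions" check: it walks a directory tree and flags world-writable regular files and setuid/setgid files. It is a simplified stand-in, not Wazuh's rootcheck logic; a production check would use an allow-list, since some system binaries (for example, `passwd`) are legitimately setuid.

```python
import os
import stat
import tempfile
from typing import Dict, List


def unusual_files(root: str) -> Dict[str, List[str]]:
    """Flag world-writable regular files and setuid/setgid files under `root`."""
    findings: Dict[str, List[str]] = {"world_writable": [], "setuid_or_setgid": []}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in sorted(filenames):
            path = os.path.join(dirpath, name)
            try:
                st = os.lstat(path)  # lstat: do not follow symlinks
            except OSError:
                continue  # the file vanished or is unreadable
            if not stat.S_ISREG(st.st_mode):
                continue  # skip symlinks, sockets, and device nodes
            if st.st_mode & stat.S_IWOTH:
                findings["world_writable"].append(path)
            if st.st_mode & (stat.S_ISUID | stat.S_ISGID):
                findings["setuid_or_setgid"].append(path)
    return findings


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        script = os.path.join(d, "cleanup.sh")
        with open(script, "w") as f:
            f.write("#!/bin/sh\n")
        os.chmod(script, 0o777)  # deliberately world-writable
        print(unusual_files(d))
```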

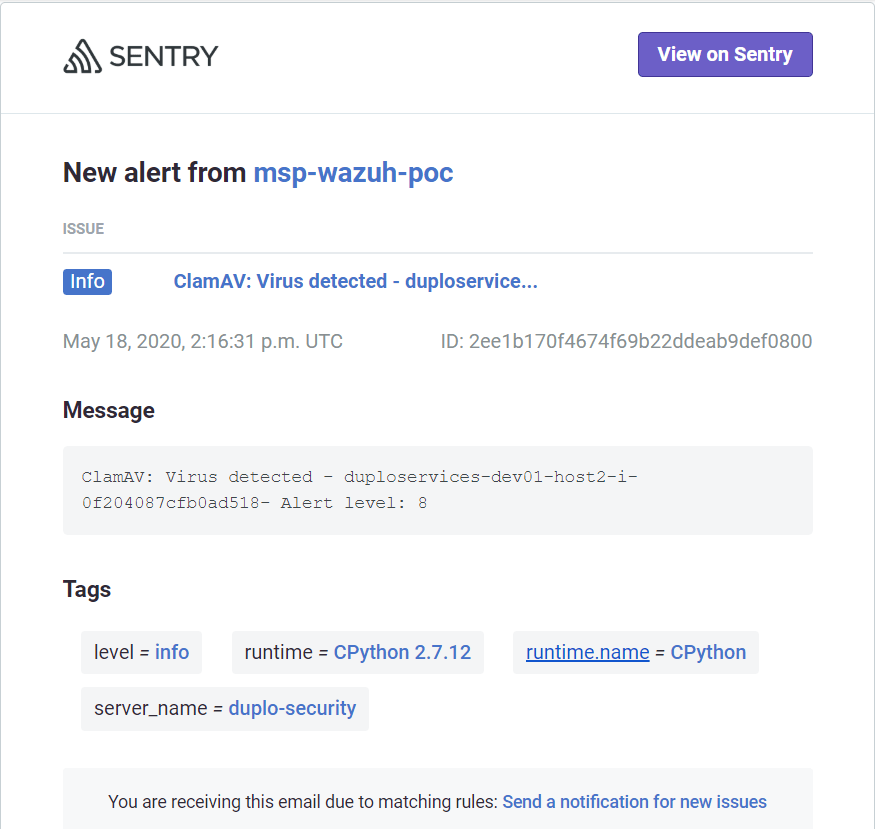

Email Alerting

DuploCloud extends Wazuh with an alerting module that sends alerts to Sentry, which in turn sends the email alerts. All alerts above a configured level (7 by default) are emailed to the users configured in Sentry.
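The level-based filtering can be sketched in Python as follows. The field names (`rule.level`, `rule.id`, `rule.description`, `agent.name`) follow Wazuh's JSON alert format, but the selection logic is an illustrative assumption rather than DuploCloud's module: it reads "above a configured level" as "at or above", and it stops short of the actual delivery to Sentry.

```python
import json
from typing import Dict, Iterable, List

DEFAULT_EMAIL_LEVEL = 7  # default threshold stated in the text


def alerts_to_email(alert_lines: Iterable[str], min_level: int = DEFAULT_EMAIL_LEVEL) -> List[Dict[str, object]]:
    """Select Wazuh-style JSON alerts whose rule level is at or above min_level."""
    selected: List[Dict[str, object]] = []
    for line in alert_lines:
        if not line.strip():
            continue
        alert = json.loads(line)
        rule = alert.get("rule", {})
        level = int(rule.get("level", 0))
        if level >= min_level:  # assumption: "above" is read as "at or above"
            selected.append({
                "level": level,
                "rule_id": rule.get("id"),
                "description": rule.get("description"),
                "agent": alert.get("agent", {}).get("name"),
            })
    return selected
```

Raising `min_level` reduces email volume at the cost of missing lower-severity events, which remain visible in the SIEM dashboard.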

Incident Management

Sentry integrates with Jira: events that arrive in Sentry can be configured to create incidents in Jira. For more information, refer to Sentry Jira Integration.

Control-by-Control PCI Implementation Detail

|

PCI DSS Requirements v3.2.1 |

DuploCloud Implementation | |

|

1. |

1.1.4 Requirements for a firewall at each Internet connection and between any demilitarized zone (DMZ) and the Internal network zone |

Infrastructure is split into public and private subnets. Dev, stage, and production are split into different VPCs/VNETs. DuploCloud automation introduces the concept of a tenant, a logical construct above AWS/Azure that represents an application's entire lifecycle. Each tenant is a security boundary, implemented with a unique SG, IAM role, and instance profile in AWS, or a unique subnet, NSG, and managed identity in Azure. By default, no access into the tenant is allowed unless specific ports are exposed via a load balancer (LB). |

|

2. |

1.1.5 Description of groups, roles, and responsibilities for management of network components. |

DuploCloud overlays the logical constructs of tenant and infrastructure to represent an application. Within a tenant, workloads are organized as services. By default, all resources within a tenant are labeled in the cloud with the tenant name. The automation also lets users set any tag at the tenant level, and that tag is automatically propagated to AWS/Azure artifacts. Background threads keep the system continuously in sync. |

|

3. |

1.2.1 Restrict inbound and outbound traffic to that which is necessary for the cardholder data environment, and specifically deny all other traffic. |

Infrastructure is split into public and private subnets. Dev, stage, and production are split into different VPCs/VNETs. DuploCloud automation introduces the concept of a tenant, a logical construct above AWS/Azure that represents an application's entire lifecycle. Each tenant is a security boundary, implemented with a unique SG, IAM role, and instance profile in AWS, or a unique subnet, NSG, and managed identity in Azure. By default, no access into the tenant is allowed unless specific ports are exposed via a load balancer (LB). |

|

4. |

1.3.1 Implement a DMZ to limit inbound traffic to only system components that provide authorized publicly accessible services, protocols, and ports. |

Infrastructure is split into public and private subnets. Dev, stage, and production are split into different VPCs/VNETs. DuploCloud automation introduces the concept of a tenant, a logical construct above AWS/Azure that represents an application's entire lifecycle. Each tenant is a security boundary, implemented with a unique SG, IAM role, and instance profile in AWS, or a unique subnet, NSG, and managed identity in Azure. By default, no access into the tenant is allowed unless specific ports are exposed via a load balancer (LB). |

|

5. |

1.3.2 Limit inbound Internet traffic to IP addresses within the DMZ. |

Infrastructure is split into public and private subnets. Dev, stage, and production are split into different VPCs/VNETs. DuploCloud automation introduces the concept of a tenant, a logical construct above AWS/Azure that represents an application's entire lifecycle. Each tenant is a security boundary, implemented with a unique SG, IAM role, and instance profile in AWS, or a unique subnet, NSG, and managed identity in Azure. By default, no access into the tenant is allowed unless specific ports are exposed via a load balancer (LB). |

|

6. |

1.3.4 Do not allow unauthorized outbound traffic from the cardholder data environment to the Internet. |

By default, all outbound traffic goes through a NAT Gateway: nodes in the private subnets can reach the Internet only via the NAT Gateway. Additional subnet ACLs can be put in place if needed. In Azure, outbound traffic can be blocked at the VNET. |

|

7. |

1.3.6 Place system components that store cardholder data (such as a database) in an internal network zone, segregated from the DMZ and other untrusted networks. |

Infrastructure is split into public and private subnets. Dev, stage, and production are split into different VPCs/VNETs. DuploCloud automation introduces the concept of a tenant, a logical construct above AWS/Azure that represents an application's entire lifecycle. Each tenant is a security boundary, implemented with a unique SG, IAM role, and instance profile in AWS, or a unique subnet, NSG, and managed identity in Azure. By default, no access into the tenant is allowed unless specific ports are exposed via a load balancer (LB). The application is split into multiple tenants, with each tenant keeping all of its private resources in a private subnet. For example, all data stores can be in one tenant and the frontend UI in a different tenant. |

|

8. |

1.3.7 Do not disclose private IP addresses and routing information to unauthorized parties. |

Use private subnets and private Route 53 hosted zones/private Azure DNS zones. |

|

9. |

1.5 Ensure that security policies and operational procedures for managing firewalls are documented, in use, and known to all affected parties |

A rules-based approach keeps the configuration consistent, documented, and free of manual errors. Further documentation is the client's responsibility; DuploCloud also adds documentation during the blueprinting process. |

|

10. |

2.1 Always change vendor-supplied defaults and remove or disable unnecessary default accounts before installing a system on the network. This applies to ALL default passwords, including but not limited to those used by operating systems, software that provides security services, application and system accounts, point-of-sale (POS) terminals, Simple Network Management Protocol (SNMP) community strings, etc.) |

DuploCloud offers both user-specified and randomly generated passwords. All end-user access is via single sign-on and is passwordless. Even access to the AWS/Azure console is granted by generating a federated console URL that is valid for less than an hour. Because most access is JIT, the system can be operated with a minimal number of user accounts. |

|

11. |

2.2.1 Implement only one primary function per server to prevent functions that require different security levels from co-existing on the same server. (For example, web servers, database servers, and DNS should be implemented on separate servers.) Note: Where virtualization technologies are in use, implement only one primary function per virtual system component. |

DuploCloud orchestrates Kubernetes node selectors to achieve this and allows VMs to be labeled accordingly. Non-container workloads are also supported, so the automation can meet this control for them as well. For example, one can install Wazuh on one VM, Suricata on another, and Elasticsearch on a third. |

|

12. |

2.2.2 Enable only necessary services, protocols, daemons, etc., as required for the function of the system. |

By default, no traffic is allowed into a tenant boundary unless it is exposed via an LB. DuploCloud automates the configuration of desired inter-tenant access without users needing to write scripts manually, and as the environment changes dynamically, DuploCloud keeps these configurations in sync. DuploCloud also reconciles orphan resources in the system and cleans them up, including Docker containers, VMs, LBs, keys, S3 buckets, and various other resources. |

|

13. |

2.2.3 Implement additional security features for any required services, protocols, or daemons that are insecure. Note: Where SSL/early TLS is used, the requirements in Appendix A2 must be completed. |

DuploCloud obtains certificates from Cert-Manager and automates SSL termination at the LB. |

|

14. |

2.2.4 Configure system security parameters to prevent misuse. |

IAM configuration and policies in AWS (including S3 bucket policies) and managed identities in Azure implement separation of duties and least privilege. Infrastructure is split into public and private subnets. Dev, stage, and production are split into different VPCs/VNETs. DuploCloud automation introduces the concept of a tenant, a logical construct above AWS/Azure that represents an application's entire lifecycle. Each tenant is a security boundary, implemented with a unique SG, IAM role, and instance profile in AWS, or a unique subnet, NSG, and managed identity in Azure. By default, no access into the tenant is allowed unless specific ports are exposed via a load balancer (LB). The application is split into multiple tenants, with each tenant keeping all of its private resources in a private subnet. For example, all data stores can be in one tenant and the frontend UI in a different tenant. |

|

15. |

2.2.5 Remove all unnecessary functionality, such as scripts, drivers, features, subsystems, file systems, and unnecessary web servers. |

DuploCloud reconciles the resources in the system against the user specifications in its database and cleans up any orphans, including Docker containers, VMs, LBs, keys, S3 buckets, and various other resources. All resources specified by the user in the database are tracked and audited every 30 seconds. |

|

16. |

2.3 Encrypt all non-console administrative access using strong cryptography. |

DuploCloud orchestrates SSL load balancers and VPN connections. It automates OpenVPN point-to-site (P2S) user management by integrating it with the user's single sign-on; for example, when a user's email is revoked from the DuploCloud portal, the user is automatically removed from the VPN server. |

|

17. |

2.4 Maintain an inventory of system components that are in scope for PCI DSS. |

All resources are stored in a database, tracked, and audited. The software maintains an exportable inventory of resources. |

|

Requirement 3: Protect stored cardholder data | ||

|

18. |

3.4.1 If disk encryption is used (rather than file- or column-level database encryption), logical access must be managed separately and independently of native operating system authentication and access control mechanisms (for example, by not using local user account databases or general network login credentials). Decryption keys must not be associated with user accounts. Note: This requirement applies in addition to all other PCI DSS encryption and key-management requirements. |

DuploCloud orchestrates AWS KMS/Azure Key Vault keys to encrypt the AWS/Azure resources in a tenant, such as databases, S3, Elasticsearch, and Redis. Access to the keys is granted only to the instance profile, without any user accounts or keys. By default, DuploCloud creates a common key per deployment, but it can be configured to use one key per tenant. |

|

19. |

3.5.2 Restrict access to cryptographic keys to the fewest number of custodians necessary. |

DuploCloud orchestrates AWS KMS/Azure Key Vault keys to encrypt the AWS/Azure resources in a tenant, such as databases, S3, Elasticsearch, and Redis. Access to the keys is granted only to the instance profile, without any user accounts or keys. By default, DuploCloud creates a common key per deployment, but it can be configured to use one key per tenant. |

|

20. |

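3.5.3 Store secret and private keys used to encrypt/decrypt cardholder data in one (or more) of the following forms at all times:

- Encrypted with a key-encrypting key that is at least as strong as the data-encrypting key, and that is stored separately from the data-encrypting key.

- Within a secure cryptographic device (such as a hardware (host) security module (HSM) or PTS-approved point-of-interaction device).

- As at least two full-length key components or key shares, in accordance with an industry-accepted method.

Note: It is not required that public keys be stored in one of these forms. |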

DuploCloud orchestrates AWS KMS/Azure Key Vault for this; those services provide this control, which is inherited. |

|

Requirement 4: Encrypt transmission of cardholder data across open, public networks | ||

|

21. |

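4.1 Use strong cryptography and security protocols to safeguard sensitive cardholder data during transmission over open, public networks, including the following:

- Only trusted keys and certificates are accepted.

- The protocol in use only supports secure versions or configurations.

- The encryption strength is appropriate for the encryption methodology in use.

Note: Where SSL/early TLS is used, the requirements in Appendix A2 must be completed. Examples of open, public networks include but are not limited to:

- The Internet

- Wireless technologies, including 802.11 and Bluetooth

- Cellular technologies, for example, Global System for Mobile communications (GSM) and Code division multiple access (CDMA)

- General Packet Radio Service (GPRS)

- Satellite communications

|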

The secure infrastructure blueprint uses Application Load Balancers with HTTPS listeners, and traffic on HTTP listeners is forwarded to HTTPS. The DuploCloud software automatically applies the latest cipher policy to the LB. |

|

Requirement 5: Protect all systems against malware and regularly update anti-virus software or programs | ||

|

22. |

5.1.1 Ensure that all anti-virus programs are capable of detecting, removing, and protecting against all known types of malicious software. |

DuploCloud enables ClamAV deployment via agent modules, and alerts are collected in Wazuh. |

|

23. |

5.1.2 For systems considered to be not commonly affected by malicious software, perform periodic evaluations to identify and evaluate evolving malware threats in order to confirm whether such systems continue to not require anti-virus software. |

DuploCloud agent modules (such as ClamAV) can be enabled on these systems as well. |

|

24. |

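5.2 Ensure that all anti-virus mechanisms are maintained as follows:

- Are kept current.

- Perform periodic scans.

- Generate audit logs, which are retained per PCI DSS Requirement 10.7.

|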

DuploCloud enables ClamAV deployment via agent modules, and alerts are collected in Wazuh. |

|

25. |

5.3 Ensure that anti-virus mechanisms are actively running and cannot be disabled or altered by users, unless specifically authorized by management on a case-by-case basis for a limited time period. Note: Anti-virus solutions may be temporarily disabled only if there is legitimate technical need, as authorized by management on a case-by-case basis. If anti-virus protection needs to be disabled for a specific purpose, it must be formally authorized. Additional security measures may also need to be implemented for the period of time during which anti-virus protection is not active. |

DuploCloud agent modules raise an alert if a service is not running. |

|

Requirement 6: Develop and maintain secure systems and applications | ||

|

26. |

6.1 Establish a process to identify security vulnerabilities, using reputable outside sources for security vulnerability information, and assign a risk ranking (for example, as “high,” “medium,” or “low”) to newly discovered security vulnerabilities. Note: Risk rankings should be based on industry best practices as well as consideration of potential impact. For example, criteria for ranking vulnerabilities may include consideration of the CVSS base score, and/or the classification by the vendor, and/or type of systems affected. Methods for evaluating vulnerabilities and assigning risk ratings will vary based on an organization’s environment and risk assessment strategy. Risk rankings should, at a minimum, identify all vulnerabilities considered to be a “high risk” to the environment. In addition to the risk ranking, vulnerabilities may be considered “critical” if they pose an imminent threat to the environment, impact critical systems, and/or would result in a potential compromise if not addressed. Examples of critical systems may include security systems, public-facing devices and systems, databases, and other systems that store, process, or transmit cardholder data. |

By default, DuploCloud installs the Wazuh agent, the AWS Inspector agent, and any other agent modules on all VMs and keeps them active. If the automatic installation fails on any node, DuploCloud raises an alarm. Alerts are configured and generated in Wazuh. DuploCloud relies on the customer's SOC team to act on the alerts; the DuploCloud team is the second line of defense if the client team cannot address an issue. |

|

27. |

6.2 Ensure that all system components and software are protected from known vulnerabilities by installing applicable vendor supplied security patches. Install critical security patches within one month of release. Note: Critical security patches should be identified according to the risk ranking process defined in Requirement 6.1. |

Patch management is done as part of the DuploCloud SOC offering. |

|

28. |

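6.3.2 Review custom code prior to release to production or customers in order to identify any potential coding vulnerability (using either manual or automated processes) to include at least the following:

- Code changes are reviewed by individuals other than the originating code author, and by individuals knowledgeable about code-review techniques and secure coding practices.

- Code reviews ensure code is developed according to secure coding guidelines.

- Appropriate corrections are implemented prior to release.

- Code-review results are reviewed and approved by management prior to release.

Note: This requirement for code reviews applies to all custom code (both internal and public-facing), as part of the system development life cycle. Code reviews can be conducted by knowledgeable internal personnel or third parties. Public-facing web applications are also subject to additional controls, to address ongoing threats and vulnerabilities after implementation, as defined at PCI DSS Requirement 6.6. |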

DuploCloud's CI/CD offering provides an out-of-box integration with SonarQube that can be integrated into the pipeline to scan the code. |

|

Requirement 7: Restrict access to cardholder data by business need to know | ||

|

29. |

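7.1.1 Define access needs for each role, including:

- System components and data resources that each role needs to access for their job function.

- Level of privilege required (for example, user, administrator, etc.) for accessing resources.

|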

The DuploCloud tenant model has built-in access controls that grant access to tenants based on user roles. This mechanism also integrates automatically with the VPN: each user has a static IP in the VPN, and based on the user's tenant access, that IP is added to the respective tenant's SG in AWS or NSG in Azure. Tenant access policies automatically apply SG- or IAM-based policies in AWS, or NSG- or managed-identity-based policies in Azure, depending on the resource type. |

|

7.2 Establish an access control system(s) for systems components that restricts access based on a user's need to know and is set to “deny all” unless specifically allowed. This access control system(s) must include the following: |

User access to the AWS/Azure console is granted based on tenant permissions and least privilege, using a just-in-time federated token that expires in less than an hour. Admins have privileged access; read-only user is another role. | |

|

30. |

7.2.1 Coverage of all system components. |

AWS resource access is controlled by IAM roles, SGs, and static VPN client IPs; Azure resource access is controlled by NSGs, managed identities, and static VPN client IPs. All of these are orchestrated implicitly and kept up to date. |

|

31. |

7.2.3 Default deny-all setting. |

This is the default DuploCloud implementation of SGs and IAM roles in AWS, and of NSGs and managed identities in Azure. |

|

Requirement 8: Identify and authenticate access to system components | ||

|

32. |

8.1.1 Assign all users a unique ID before allowing them to access system components or cardholder data. |

DuploCloud integrates with the client's IDP, such as G Suite or O365, for access to the portal. From there, a federated login is performed for AWS/Azure resource access. |

|

33. |

8.1.2 Control addition, deletion, and modification of user IDs, credentials, and other identifier objects. |

This is done at the infrastructure level in the DuploCloud portal using single sign-on. |

|

34. |

8.1.3 Immediately revoke access for any terminated users. |

DuploCloud integrates with the client's IDP, such as G Suite or O365, for access to the portal. The moment the email is disabled, all access is revoked. Even if the user has, say, a private key to a VM, they cannot connect, because their VPN access will be deprovisioned. |

|

35. |

8.1.4 Remove/disable inactive user accounts within 90 days. |

DuploCloud integrates with the client's IDP, such as G Suite or O365, for access to the portal. The moment the email is disabled, all access is revoked. Even if the user has, say, a private key to a VM, they cannot connect, because their VPN access will be deprovisioned. |

|

36. |

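8.1.5 Manage IDs used by third parties to access, support, or maintain system components via remote access as follows:

- Enabled only during the time period needed and disabled when not in use.

- Monitored when in use.

|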

DuploCloud integrates by calling the AWS STS API to provide a JIT token and URL. |

|

37. |

8.1.6 Limit repeated access attempts by locking out the user ID after not more than six attempts. |

DuploCloud integrates with the client's IDP, such as G Suite or O365, for access to the portal. When DuploCloud-managed OpenVPN is used, it is set up to lock the user out after failed attempts. |

|

38. |

8.1.7 Set the lockout duration to a minimum of 30 minutes or until an administrator enables the user ID. |

DuploCloud integrates with the client's IDP, such as G Suite or O365, for access to the portal. When DuploCloud-managed OpenVPN is used, it is set up to lock the user out after failed attempts. In OpenVPN, an admin must unlock the user. |

|

39. |

8.1.8 If a session has been idle for more than 15 minutes, require the user to re-authenticate to re-activate the terminal or session. |

DuploCloud single sign-on has a configurable timeout. For AWS/Azure resource access, DuploCloud provides JIT access. |

|

40. |

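8.2 In addition to assigning a unique ID, ensure proper user-authentication management for non-consumer users and administrators on all system components by employing at least one of the following methods to authenticate all users:

- Something you know, such as a password or passphrase.

- Something you have, such as a token device or smart card.

- Something you are, such as a biometric.

|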

DuploCloud relies on the client's single sign-on/IDP. If the client secures its corporate login using these controls, then by virtue of single sign-on they are also implemented in the infrastructure. |

|

41. |

8.2.1 Using strong cryptography, render all authentication credentials (such as passwords/phrases) unreadable during transmission and storage on all system components. |

Encryption at rest is done via AWS KMS/Azure Key Vault, and encryption in transit via SSL/TLS. |

|

42. |

8.2.2 Verify user identity before modifying any authentication credential—for example, performing password resets, provisioning new tokens, or generating new keys. |

DuploCloud integrates with the client's IDP, such as G Suite or O365, for access to the portal. |

|

43. |

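8.2.3 Passwords/phrases must meet the following:

- Require a minimum length of at least seven characters.

- Contain both numeric and alphabetic characters.

Alternatively, the passwords/phrases must have complexity and strength at least equivalent to the parameters specified above. |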

Enforced by AWS/Azure, and should also be enforced by the client's IDP, with which DuploCloud integrates. The control should be implemented by the organization's IDP. |

|

44. |

8.2.4 Change user passwords/passphrases at least every 90 days. |

DuploCloud integrates with the client's IDP, such as G Suite or O365, for access to the portal. |

|

45. |

8.2.5 Do not allow an individual to submit a new password/phrase that is the same as any of the last four passwords/phrases he or she has used. |

Enforced by AWS/Azure, and should also be enforced by the client's IDP, with which DuploCloud integrates. The control should be implemented by the organization's IDP. |

|

46. |

8.2.6 Set passwords/phrases for first time use and upon reset to a unique value for each user and change immediately after the first use. |

Enforced by AWS/Azure, and should also be enforced by the client's IDP, with which DuploCloud integrates. The control should be implemented by the organization's IDP. |

|

47. |

8.3 Secure all individual non-console administrative access and all remote access to the CDE using multi-factor authentication. Note: Multi-factor authentication requires that a minimum of two of the three authentication methods (see Requirement 8.2 for descriptions of authentication methods) be used for authentication. Using one factor twice (for example, using two separate passwords) is not considered multi-factor authentication. |

DuploCloud integrates with the client's IDP, such as G Suite or O365, for access to the portal. OpenVPN has MFA enabled. |

|

48. |

8.3.1 Incorporate multi-factor authentication for all non-console access into the CDE for personnel with administrative access. |

DuploCloud integrates with the client's IDP, such as G Suite or O365, for access to the portal. OpenVPN has MFA enabled. |

|

49. |

8.3.2 Incorporate multi-factor authentication for all remote network access (both user and administrator and including third-party access for support or maintenance) originating from outside the entity’s network. |

DuploCloud integrates with the client's IDP, such as G Suite or O365, for access to the portal. OpenVPN has MFA enabled. |

|

50. |

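8.7 All access to any database containing cardholder data (including access by applications, administrators, and all other users) is restricted as follows:

- All user access to, user queries of, and user actions on databases are through programmatic methods.

- Only database administrators have the ability to directly access or query databases.

- Application IDs for database applications can only be used by the applications (and not by individual users or other non-application processes).

|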

Through the IAM integration with the database, SQL connections are also made via the instance profile. For users, individual JIT access is granted that lasts only 15 minutes. |

|

Requirement 10: Track and monitor all access to network resources and cardholder data | ||

|

51. |

10.1 Implement audit trails to link all access to system components to each individual user. |

In addition to CloudTrail, DuploCloud maintains audit trails in two places: it logs all write events for infrastructure changes in an ELK cluster, and the Wazuh agent tracks all activity at the host level. All three sources (CloudTrail, DuploCloud audit logs, and Wazuh agent events) are brought together in the Wazuh dashboard. |

|

52. |

10.2.1 All individual user accesses to cardholder data. |

Infrastructure updates are audited and stored in ELK. Database access is through JIT access. SSH access to VMs is tracked via Wazuh syslog collection. |

|

52. |

10.2.2 All actions taken by any individual with root or administrative privileges. |

Infrastructure updates are audited and stored in ELK. Database access is through JIT access. SSH access to VMs is tracked via Wazuh syslog collection. |

|

53. |

10.2.3 Access to all audit trails. |

Infrastructure updates are audited and stored in ELK. Database access is through JIT access. SSH access to VMs is tracked via Wazuh syslog collection. |

|

54. |

10.2.4 Invalid logical access attempts. |

CloudTrail and syslog hold this information, which is stored in the centralized SIEM (Wazuh). |

|

55. |

10.2.5 Use of and changes to identification and authentication mechanisms—including but not limited to creation of new accounts and elevation of privileges—and all changes, additions, or deletions to accounts with root or administrative privileges. |

Infrastructure updates are audited and stored in ELK. Database access is through JIT access. SSH access to VMs is tracked via Wazuh syslog collection. |

|

56. |

10.2.6 Initialization, stopping, or pausing of the audit logs |

AWS IAM policies prevent starting or stopping AWS CloudTrail, S3 bucket policies protect access to log data, alerts are sent if AWS CloudTrail is disabled, and an AWS Config rule monitors that AWS CloudTrail is enabled. |

|

57. |

10.2.7 Creation and deletion of system level objects |

In addition to CloudTrail, DuploCloud maintains audit trails in two places: it logs all write events for infrastructure changes in an ELK cluster, and the Wazuh agent tracks all activity at the host level. All three sources (CloudTrail, DuploCloud audit logs, and Wazuh agent events) are brought together in the Wazuh dashboard. |

|

| | 10.3 Record at least the following audit trail entries for all system components for each event: | |

| 58. | 10.3.1 User identification. | In addition to AWS CloudTrail, DuploCloud maintains audit trails in two places: all write events for infrastructure changes are logged in an ELK cluster, and the Wazuh agent tracks all activity at the host level. Events from all three sources (CloudTrail, DuploCloud audit logs, and the Wazuh agent) are brought together in the Wazuh dashboard. |

| 59. | 10.3.2 Type of event. | In addition to AWS CloudTrail, DuploCloud maintains audit trails in two places: all write events for infrastructure changes are logged in an ELK cluster, and the Wazuh agent tracks all activity at the host level. Events from all three sources (CloudTrail, DuploCloud audit logs, and the Wazuh agent) are brought together in the Wazuh dashboard. |

| 60. | 10.3.3 Date and time. | In addition to AWS CloudTrail, DuploCloud maintains audit trails in two places: all write events for infrastructure changes are logged in an ELK cluster, and the Wazuh agent tracks all activity at the host level. Events from all three sources (CloudTrail, DuploCloud audit logs, and the Wazuh agent) are brought together in the Wazuh dashboard. |

| 61. | 10.3.4 Success or failure indication. | In addition to AWS CloudTrail, DuploCloud maintains audit trails in two places: all write events for infrastructure changes are logged in an ELK cluster, and the Wazuh agent tracks all activity at the host level. Events from all three sources (CloudTrail, DuploCloud audit logs, and the Wazuh agent) are brought together in the Wazuh dashboard. |

| 62. | 10.3.5 Origination of event. | In addition to AWS CloudTrail, DuploCloud maintains audit trails in two places: all write events for infrastructure changes are logged in an ELK cluster, and the Wazuh agent tracks all activity at the host level. Events from all three sources (CloudTrail, DuploCloud audit logs, and the Wazuh agent) are brought together in the Wazuh dashboard. |

| 63. | 10.3.6 Identity or name of affected data, system component, or resource. | In addition to AWS CloudTrail, DuploCloud maintains audit trails in two places: all write events for infrastructure changes are logged in an ELK cluster, and the Wazuh agent tracks all activity at the host level. Events from all three sources (CloudTrail, DuploCloud audit logs, and the Wazuh agent) are brought together in the Wazuh dashboard. |

| 64. | 10.4 Using time-synchronization technology, synchronize all critical system clocks and times and ensure that the following is implemented for acquiring, distributing, and storing time. Note: One example of time synchronization technology is Network Time Protocol (NTP). | All instances launched in the VPC are synchronized with NTP; DuploCloud injects user data that configures time synchronization. |

| 65. | 10.4.1 Critical systems have the correct and consistent time. | All instances launched in the VPC are synchronized with NTP through user data that DuploCloud adds implicitly. All log data carries NTP-synchronized timestamps. |

| 66. | 10.4.3 Time settings are received from industry-accepted time sources. | All instances launched in the VPC are synchronized with AWS NTP servers, which in turn obtain time from NTP.org. |

| 67. | 10.5.1 Limit viewing of audit trails to those with a job-related need. | Access to view audit trails is governed by DuploCloud access controls. |

| 68. | 10.5.2 Protect audit trail files from unauthorized modifications. | CloudTrail policies are in place, and access to Wazuh and ELK is limited to administrators. |

| 69. | 10.5.3 Promptly back up audit trail files to a centralized log server or media that is difficult to alter. | DuploCloud performs this automatically. |

| 70. | 10.5.4 Write logs for external-facing technologies onto a secure, centralized, internal log server or media device. | In addition to AWS CloudTrail, DuploCloud maintains audit trails in two places: all write events for infrastructure changes are logged in an ELK cluster, and the Wazuh agent tracks all activity at the host level. Events from all three sources (CloudTrail, DuploCloud audit logs, and the Wazuh agent) are brought together in the Wazuh dashboard. |

| 71. | 10.5.5 Use file integrity monitoring or change-detection software on logs to ensure that existing log data cannot be changed without generating alerts (although new data being added should not cause an alert). | We store CloudTrail data in a separate AWS account, and Wazuh provides file integrity monitoring (FIM). |

| 72. | 10.6.1 Review the following at least daily: | Done by the DuploCloud SOC team. |

| 73. | 10.7 Retain audit trail history for at least one year, with a minimum of three months immediately available for analysis (for example, online, archived, or restorable from backup). | DuploCloud automatically snapshots SIEM indexes once they grow beyond a set threshold (at least three months of data) and then deletes them from the running system. Any archived index can be restored in a few clicks. Because indexes are created per day, it is straightforward to meet retention guidelines such as the three-month requirement here. |

| | Requirement 11: Regularly test security systems and processes | |

| 74. | 11.2 Run internal and external network vulnerability scans at least quarterly and after any significant change in the network (such as new system component installations, changes in network topology, firewall rule modifications, product upgrades). Note: Multiple scan reports can be combined for the quarterly scan process to show that all systems were scanned and all applicable vulnerabilities have been addressed. Additional documentation may be required to verify non-remediated vulnerabilities are in the process of being addressed. For initial PCI DSS compliance, it is not required that four quarters of passing scans be completed if the assessor verifies 1) the most recent scan result was a passing scan, 2) the entity has documented policies and procedures requiring quarterly scanning, and 3) vulnerabilities noted in the scan results have been corrected as shown in a re-scan(s). For subsequent years after the initial PCI DSS review, four quarters of passing scans must have occurred. | Offered as part of DuploCloud SOC. |

| 75. | 11.2.1 Perform quarterly internal vulnerability scans. Address vulnerabilities and perform rescans to verify all “high risk” vulnerabilities are resolved in accordance with the entity’s vulnerability ranking (per Requirement 6.1). Scans must be performed by qualified personnel. | Offered as part of DuploCloud SOC. |

| 76. | 11.3.3 Exploitable vulnerabilities found during penetration testing are corrected and testing is repeated to verify the corrections. | Where changing the application is less viable, DuploCloud enables WAF rules that mitigate many of these vulnerabilities. |

| 77. | 11.4 Use intrusion-detection and/or intrusion-prevention techniques to detect and/or prevent intrusions into the network. Monitor all traffic at the perimeter of the cardholder data environment as well as at critical points in the cardholder data environment, and alert personnel to suspected compromises. Keep all intrusion-detection and prevention engines, baselines, and signatures up to date. | DuploCloud orchestrates AWS Traffic Mirroring to send a copy of the traffic at all critical points (tenants) to a Suricata VM. Wazuh fetches the resulting alerts and displays them in the central dashboard. This provides intrusion detection (IDS); if prevention is also desired, Suricata is enabled on each critical VM, preferably in the AMI (image) itself. The Wazuh agent then fetches those alerts and forwards them to the Wazuh SIEM. |

| 78. | 11.5 Deploy a change-detection mechanism (for example, file-integrity monitoring tools) to alert personnel to unauthorized modification of critical system files, configuration files, or content files; and configure the software to perform critical file comparisons at least weekly. Note: For change-detection purposes, critical files are usually those that do not regularly change, but the modification of which could indicate a system compromise or risk of compromise. Change-detection mechanisms such as file-integrity monitoring products usually come preconfigured with critical files for the related operating system. Other critical files, such as those for custom applications, must be evaluated and defined by the entity (that is, the merchant or service provider). | DuploCloud orchestrates installation and updates of the Wazuh agent on every server that is launched. The Wazuh agent then performs FIM and raises alerts, which are first triaged by the client SOC team. |

| 79. | 11.5.1 Implement a process to respond to any alerts generated by the change detection solution. | The DuploCloud SOC team receives the alert email and responds according to the defined and approved incident management solution. |
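Control 10.2.6 relies on AWS IAM policies that prevent CloudTrail from being stopped. As a minimal sketch, assuming the protection is expressed as an explicit-deny IAM policy, the Python below builds such a policy document. The helper name `cloudtrail_guard_policy`, the trail ARN, and the account ID are hypothetical, and DuploCloud's actual policy may differ. The four `cloudtrail:` actions are real CloudTrail API actions.

```python
import json

# CloudTrail API actions that could stop, delete, or weaken an audit trail.
PROTECTED_ACTIONS = [
    "cloudtrail:StopLogging",
    "cloudtrail:DeleteTrail",
    "cloudtrail:UpdateTrail",
    "cloudtrail:PutEventSelectors",
]


def cloudtrail_guard_policy(trail_arn: str) -> dict:
    """Return an IAM policy document that explicitly denies tampering with one trail.

    Hypothetical helper for illustration; not a DuploCloud API.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyCloudTrailTampering",
                "Effect": "Deny",
                "Action": PROTECTED_ACTIONS,
                "Resource": trail_arn,
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder account ID and trail name.
    example_arn = "arn:aws:cloudtrail:us-east-1:111122223333:trail/org-audit-trail"
    print(json.dumps(cloudtrail_guard_policy(example_arn), indent=2))
```

In IAM an explicit `Deny` overrides any `Allow`, so principals that carry this statement cannot stop or reconfigure the trail. Attaching the same statement as an organization-wide service control policy is a common alternative placement.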
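Controls 10.3.1 through 10.3.6 list the fields that every audit trail entry must carry. The sketch below normalizes one CloudTrail record into those six fields before it is consolidated in a SIEM. The `AuditEntry` type and the `from_cloudtrail` helper are hypothetical names, and the sample record is illustrative. The keys it reads (`eventSource`, `eventName`, `eventTime`, `userIdentity`, `sourceIPAddress`, `errorCode`, `resources`) are standard CloudTrail record fields.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class AuditEntry:
    """The six fields PCI DSS 10.3 asks each audit trail entry to record."""
    user: str            # 10.3.1 user identification
    event_type: str      # 10.3.2 type of event
    timestamp: datetime  # 10.3.3 date and time
    success: bool        # 10.3.4 success or failure indication
    origin: str          # 10.3.5 origination of event
    resource: str        # 10.3.6 affected data, component, or resource


def from_cloudtrail(record: dict) -> AuditEntry:
    """Map one parsed CloudTrail record to an AuditEntry (hypothetical helper)."""
    identity = record.get("userIdentity", {})
    resources = record.get("resources") or [{}]
    return AuditEntry(
        user=identity.get("arn", identity.get("type", "unknown")),
        event_type=f'{record["eventSource"]}:{record["eventName"]}',
        # CloudTrail timestamps are UTC, e.g. "2024-01-15T10:00:00Z".
        timestamp=datetime.fromisoformat(record["eventTime"].replace("Z", "+00:00")),
        # CloudTrail includes errorCode only when the call failed.
        success="errorCode" not in record,
        origin=record.get("sourceIPAddress", "unknown"),
        resource=resources[0].get("ARN", "unknown"),
    )


if __name__ == "__main__":
    # Illustrative record: a denied attempt to delete an S3 bucket.
    sample = {
        "eventSource": "s3.amazonaws.com",
        "eventName": "DeleteBucket",
        "eventTime": "2024-01-15T10:00:00Z",
        "userIdentity": {"type": "IAMUser", "arn": "arn:aws:iam::111122223333:user/alice"},
        "sourceIPAddress": "203.0.113.10",
        "errorCode": "AccessDenied",
        "resources": [{"ARN": "arn:aws:s3:::example-bucket"}],
    }
    print(from_cloudtrail(sample))
```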
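Controls 10.4 and 10.4.1 depend on NTP keeping clocks correct and consistent. The sketch below shows the standard NTP estimate a client uses to measure its clock offset and round-trip delay against a time server, followed by a tolerance check. `MAX_OFFSET_MS` is a hypothetical threshold, because PCI DSS does not prescribe a value. This illustrates the protocol only and is not DuploCloud's synchronization code.

```python
from typing import Tuple

# Hypothetical tolerance; PCI DSS does not prescribe a specific value.
MAX_OFFSET_MS = 1000


def ntp_offset_and_delay(t0: float, t1: float, t2: float, t3: float) -> Tuple[float, float]:
    """Standard NTP clock-offset and round-trip-delay estimate.

    t0: client transmit time (client clock)
    t1: server receive time (server clock)
    t2: server transmit time (server clock)
    t3: client receive time (client clock)
    A positive offset means the client clock is behind the server.
    """
    offset = ((t1 - t0) + (t2 - t3)) / 2
    delay = (t3 - t0) - (t2 - t1)
    return offset, delay


def clock_is_consistent(offset_ms: float, max_offset_ms: float = MAX_OFFSET_MS) -> bool:
    """True when the local clock is within tolerance of the reference server."""
    return abs(offset_ms) <= max_offset_ms


if __name__ == "__main__":
    # Client clock runs 5 s behind the server; each network leg takes 100 ms.
    offset, delay = ntp_offset_and_delay(95_000, 100_100, 100_100, 95_200)
    print(offset, delay, clock_is_consistent(offset))  # 5000.0 200 False
```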
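Control 10.5.5 asks for alerts when existing log data changes, but not when new data is appended. A minimal sketch of one append-aware technique follows. It records the file length and a SHA-256 digest at each check, then verifies that the previously seen prefix is unchanged. This is a generic approach, not how Wazuh FIM is implemented internally, and `LogCheckpoint`, `checkpoint`, and `check_log` are hypothetical names.

```python
import hashlib
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class LogCheckpoint:
    """State from the previous check: byte length and SHA-256 of those bytes."""
    length: int
    digest: str


def checkpoint(data: bytes) -> LogCheckpoint:
    return LogCheckpoint(len(data), hashlib.sha256(data).hexdigest())


def check_log(previous: LogCheckpoint, current: bytes) -> Tuple[str, LogCheckpoint]:
    """Return ("ok" or "alert", checkpoint to use next time).

    Appended data is accepted. Truncation, or any change to bytes already
    covered by the previous checkpoint, raises an alert. On alert the old
    checkpoint is kept, so later checks keep alerting until someone investigates.
    """
    if len(current) < previous.length:
        return "alert", previous  # log was truncated
    prefix = current[: previous.length]
    if hashlib.sha256(prefix).hexdigest() != previous.digest:
        return "alert", previous  # previously recorded data was modified
    return "ok", checkpoint(current)


if __name__ == "__main__":
    cp = checkpoint(b"login alice ok\n")
    print(check_log(cp, b"login alice ok\nlogin bob failed\n")[0])  # ok
    print(check_log(cp, b"login alice FAILED\n")[0])  # alert
```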
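The 10.7 implementation above relies on per-day SIEM indexes, which make retention decisions simple date arithmetic. The sketch below splits daily indexes into those kept online and those to snapshot and then delete. It assumes a hypothetical `wazuh-alerts-YYYY.MM.DD` naming scheme and uses index age where the text describes a size-based trigger. Keeping the snapshots themselves for at least one year is not modeled here.

```python
import re
from datetime import date, datetime
from typing import Dict, Iterable, List

# Hypothetical daily index naming, e.g. "wazuh-alerts-2024.07.01".
DAILY_INDEX = re.compile(r"^wazuh-alerts-(\d{4}\.\d{2}\.\d{2})$")
# PCI DSS 10.7: at least three months immediately available for analysis.
ONLINE_DAYS = 90


def plan_retention(index_names: Iterable[str], today: date) -> Dict[str, List[str]]:
    """Split daily indexes into those kept online and those to snapshot, then delete."""
    plan: Dict[str, List[str]] = {"keep_online": [], "snapshot_then_delete": []}
    for name in index_names:
        match = DAILY_INDEX.match(name)
        if match is None:
            continue  # not a daily alert index; leave it alone
        day = datetime.strptime(match.group(1), "%Y.%m.%d").date()
        if (today - day).days <= ONLINE_DAYS:
            plan["keep_online"].append(name)
        else:
            plan["snapshot_then_delete"].append(name)
    return plan


if __name__ == "__main__":
    names = ["wazuh-alerts-2024.06.30", "wazuh-alerts-2024.01.01", ".kibana"]
    print(plan_retention(names, date(2024, 7, 1)))
```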
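For control 11.4, mirrored traffic is inspected by Suricata, and its alerts are then collected by Wazuh. The sketch below approximates the filtering step on Suricata's EVE JSON output. It reads one JSON event per line and keeps only `alert` events at or above a severity cutoff. The function name and the default cutoff of 2 are hypothetical. The `event_type`, `alert.signature`, `alert.severity`, `src_ip`, and `dest_ip` keys follow the EVE format, in which severity 1 is the most severe.

```python
import json
from typing import Dict, Iterable, List


def high_severity_alerts(eve_lines: Iterable[str], max_severity: int = 2) -> List[Dict]:
    """Pick Suricata EVE alerts at or above a severity cutoff.

    Suricata ranks severity 1 as most severe, so "at or above" means a
    numerically smaller or equal value.
    """
    results = []
    for raw in eve_lines:
        raw = raw.strip()
        if not raw:
            continue
        event = json.loads(raw)
        if event.get("event_type") != "alert":
            continue  # eve.json also carries flow, dns, http, and other events
        alert = event.get("alert", {})
        severity = alert.get("severity")
        if severity is not None and severity <= max_severity:
            results.append({
                "signature": alert.get("signature"),
                "severity": severity,
                "source": f"{event.get('src_ip')}:{event.get('src_port')}",
                "destination": f"{event.get('dest_ip')}:{event.get('dest_port')}",
            })
    return results


if __name__ == "__main__":
    # Illustrative EVE lines; real ones come from Suricata's eve.json.
    sample = [
        '{"event_type": "flow", "src_ip": "10.0.0.5"}',
        '{"event_type": "alert", "src_ip": "198.51.100.7", "src_port": 4444, '
        '"dest_ip": "10.0.1.20", "dest_port": 22, '
        '"alert": {"signature": "Example SSH scan", "severity": 2}}',
    ]
    for hit in high_severity_alerts(sample):
        print(hit)
```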
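Control 11.5 requires comparing critical files against a baseline at least weekly. Wazuh's FIM performs this natively. The sketch below only illustrates the comparison itself: it hashes a set of files and reports additions, removals, and modifications relative to an earlier snapshot. The `snapshot` and `compare` names and the example file list are hypothetical. Each entity defines its own critical files, as the control notes.

```python
import hashlib
from pathlib import Path
from typing import Dict, Iterable, List


def snapshot(paths: Iterable[Path]) -> Dict[str, str]:
    """Map each existing file path to the SHA-256 digest of its contents."""
    return {
        str(p): hashlib.sha256(p.read_bytes()).hexdigest()
        for p in paths
        if p.is_file()
    }


def compare(baseline: Dict[str, str], current: Dict[str, str]) -> Dict[str, List[str]]:
    """Report files added, removed, or modified since the baseline was taken."""
    return {
        "added": sorted(current.keys() - baseline.keys()),
        "removed": sorted(baseline.keys() - current.keys()),
        "modified": sorted(
            path for path in baseline.keys() & current.keys()
            if baseline[path] != current[path]
        ),
    }


if __name__ == "__main__":
    # Hypothetical critical files; real deployments define their own list.
    critical = [Path("/etc/hosts"), Path("/etc/passwd")]
    baseline = snapshot(critical)
    # Later, for example on a weekly schedule, take a new snapshot and compare.
    changes = compare(baseline, snapshot(critical))
    if any(changes.values()):
        print("ALERT:", changes)
```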

Control-by-Control HIPAA Implementation Detail

| | HIPAA Regulation Text | DuploCloud Implementation |

| 1. | §164.306(a) Covered entities and business associates must do the following: (1) Ensure the confidentiality, integrity, and availability of all electronic protected health information the covered entity or business associate creates, receives, maintains, or transmits. (2) Protect against any reasonably anticipated threats or hazards to the security or integrity of such information. (3) Protect against any reasonably anticipated uses or disclosures of such information that are not permitted or required under subpart E of this part; and (4) Ensure compliance with this subpart by its workforce. | For data at rest, DuploCloud orchestrates a KMS key per tenant to encrypt the AWS resources in that tenant, such as RDS databases, S3, Elasticsearch, and Redis. For data in transit, DuploCloud fetches certificates from AWS Certificate Manager so that all requests can be made over TLS. |

| 2. | §164.308(a) A covered entity or business associate must, in accordance with §164.306: (1)(i) Implement policies and procedures to prevent, detect, contain, and correct security violations. | A rules-based approach keeps the configuration error-free, consistent, and documented. DuploCloud also provides audit trails for every change in the system. |

| 3. | §164.308(a)(1)(ii)(A) Conduct an accurate and thorough assessment of the potential risks and vulnerabilities to the confidentiality, integrity, and availability of electronic protected health information held by the covered entity or business associate. | Inherited from AWS. |

| 4. | §164.308(a)(1)(ii)(B) Implement security measures sufficient to reduce risks and vulnerabilities to a reasonable and appropriate level to comply with §164.306(a). | A rules-based approach keeps the configuration error-free, consistent, and documented. Additional documentation is the client's responsibility, and we also contribute documentation during the blueprinting process. |

| 5. | §164.308(a)(1)(ii)(D) Implement procedures to regularly review records of information system activity, such as audit logs, access reports, and security incident tracking reports. | In addition to AWS CloudTrail, DuploCloud maintains audit trails in two places: all write events for infrastructure changes are logged in an ELK cluster, and the Wazuh agent tracks all activity at the host level. Events from all three sources (CloudTrail, DuploCloud audit logs, and the Wazuh agent) are brought together in the Wazuh dashboard. |

| 6. | §164.308(a)(3)(i) Implement policies and procedures to ensure that all members of its workforce have appropriate access to electronic protected health information, as provided under paragraph (a)(4) of this section, and to prevent those workforce members who do not have access under paragraph (a)(4) of this section from obtaining access to electronic protected health information. | DuploCloud automation introduces the concept of a tenant, a logical construct above AWS that represents an application's entire lifecycle. Each tenant is a security boundary, implemented through a unique security group (SG), IAM role, and instance profile per tenant. By default, no access into the tenant is allowed unless specific ports are exposed via an ELB. |

| 7. | §164.308(a)(5)(ii)(C) Procedures for monitoring log-in attempts and reporting discrepancies. | In addition to AWS CloudTrail, DuploCloud maintains audit trails in two places: all write events for infrastructure changes are logged in an ELK cluster, and the Wazuh agent tracks all activity at the host level. Events from all three sources (CloudTrail, DuploCloud audit logs, and the Wazuh agent) are brought together in the Wazuh dashboard. |

| 8. | §164.308(a)(5)(ii)(D) Procedures for creating, changing, and safeguarding passwords. | DuploCloud supports both user-specified passwords and random password generation. All end-user access is through single sign-on and is passwordless. Even AWS console access is granted by generating a federated console URL that is valid for less than an hour. Because most access is JIT, the system operates with a minimal number of user accounts. |